Welcome to EPHE 487/591: Biomedical Statistics!

Here you will find everything you need to successfully complete the course.

Here you will find everything you need to successfully complete the course.

Lesson 1. Introduction

Topic 1.1 What to measure?

Field (pages 1-12)

Key terms to know:

Hypothesis

Independent Variable

Dependent Variable

Predictor Variable

Outcome Variable

Level of Measurement

Categorical Variable

Binary Variable

Nominal Variable

Ordinal Variable

Continuous Variable

Interval Variable

Ratio Variable

Discrete Variable

Measurement Error

Validity

Reliability

Criterion Validity

Content Validity

Test-Retest Reliability

Topic 1.2 How to measure it.

Field (pages 13 -17)

Key terms to know:

Correlational Research

Experimental Research

Ecological Validity

Confounding Variables

Between Subject Designs / Independent Group Designs

Within Subject Designs / Repeated Measures Designs

Unsystematic Variation

Systematic Variation

Randomization

Counterbalancing

Assignment 1

1. Install R and R Studio HERE

2. Install JASP HERE

Note on Macs I believe you need to install xQuartz to get JASP to work.

Field (pages 1-12)

Key terms to know:

Hypothesis

Independent Variable

Dependent Variable

Predictor Variable

Outcome Variable

Level of Measurement

Categorical Variable

Binary Variable

Nominal Variable

Ordinal Variable

Continuous Variable

Interval Variable

Ratio Variable

Discrete Variable

Measurement Error

Validity

Reliability

Criterion Validity

Content Validity

Test-Retest Reliability

Topic 1.2 How to measure it.

Field (pages 13 -17)

Key terms to know:

Correlational Research

Experimental Research

Ecological Validity

Confounding Variables

Between Subject Designs / Independent Group Designs

Within Subject Designs / Repeated Measures Designs

Unsystematic Variation

Systematic Variation

Randomization

Counterbalancing

Assignment 1

1. Install R and R Studio HERE

2. Install JASP HERE

Note on Macs I believe you need to install xQuartz to get JASP to work.

Lesson 2. Analyzing Data

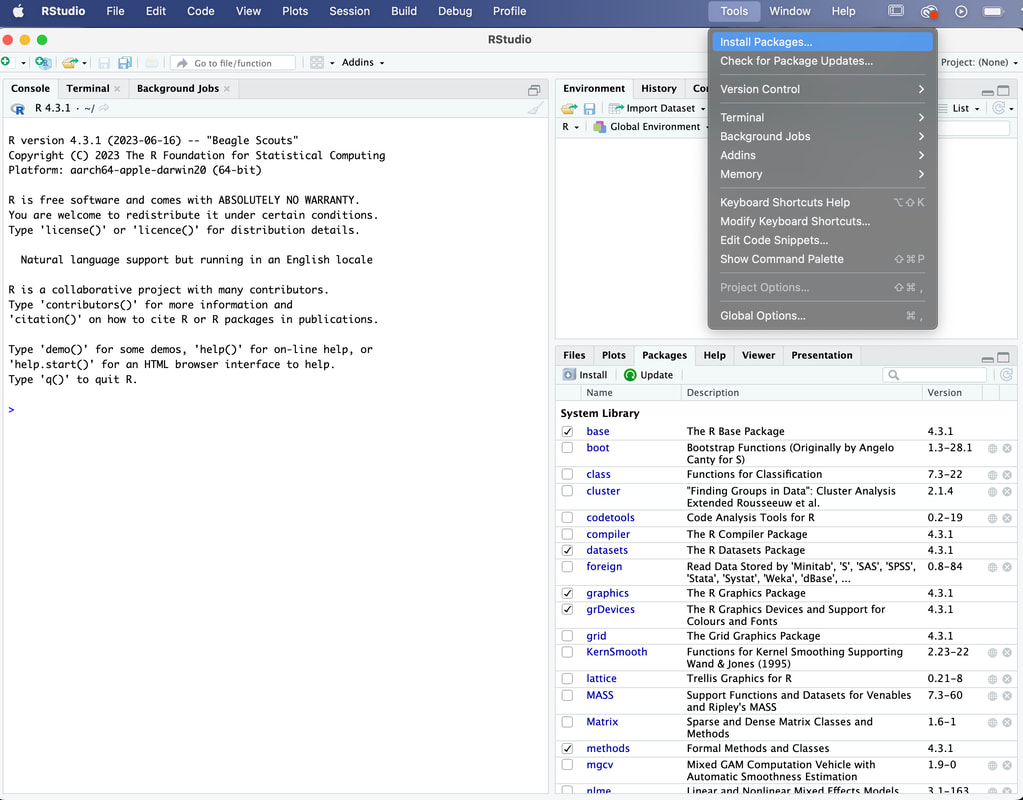

I personally think it is a lot easier to use R Studio to work with R. R Studio is a shell that visualizes a lot of things that are typically hidden in such as variables. It also provides a menu driven selection of tools that R users typically have to execute using R code. This tutorial assumes you have R Studio installed.

Once R Studio is installed I would recommend creating a directory for your working R files. Do this now.

Open R Studio (note, R automatically loads once R studio is opened). Take some times and explore the menu items and the workspace.

IMPORTANT! 90% of more of the mistakes I see students make are typographical - R is an exact programming language! You must have your syntax perfect or there will be an error!

Using Session --> Set Working Directory --> Choose Directory set the directory to your R directory. Note, you will see the command for changing the working directory in your command window after using the Session drop down menu. To do this from the command prompt you would use setwd("~/R") where whatever is in quotes is your path to your R directory.

Installing Packages

One of the reasons R is so powerful is that users can write their own "packages" which add tools to R. You can download these packages for free.

To install a package, go to Tools - Install Packages.

Once R Studio is installed I would recommend creating a directory for your working R files. Do this now.

Open R Studio (note, R automatically loads once R studio is opened). Take some times and explore the menu items and the workspace.

IMPORTANT! 90% of more of the mistakes I see students make are typographical - R is an exact programming language! You must have your syntax perfect or there will be an error!

Using Session --> Set Working Directory --> Choose Directory set the directory to your R directory. Note, you will see the command for changing the working directory in your command window after using the Session drop down menu. To do this from the command prompt you would use setwd("~/R") where whatever is in quotes is your path to your R directory.

Installing Packages

One of the reasons R is so powerful is that users can write their own "packages" which add tools to R. You can download these packages for free.

To install a package, go to Tools - Install Packages.

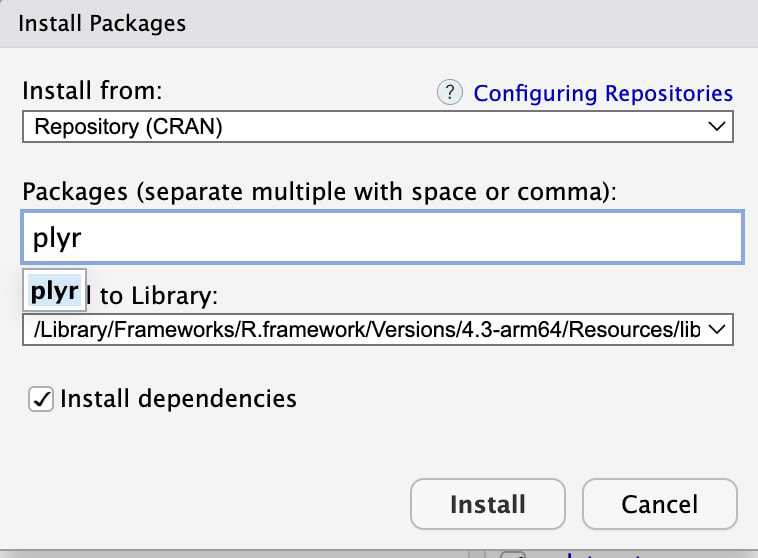

You need to know the name of the package you want, type in plyr and click on Install. Make sure Install Dependencies is checked.

If you click on the Packages tab as seen above, you can check to see that plyr is installed. You can see the tab on the first image above.

At the command prompt type library("plyr"). This tells R Studio to load the plyr library for use. You will need to do this each time you use a library, although you can set R to load libraries automatically. There are reasons for not doing this - we will get to that later.

Okay, the next thing we will do today is load some data into R.

There are a variety of ways to do this of course, but I will focus on the read.table command. We will work with the file data.txt. Download it now. I would encourage you to open this file in a text editor or EXCEL to take a look at it.

Make sure you put the data.txt file in your Working Directory.

Now, at the command prompt, ">" type the following command: myData = read.table("data.txt").

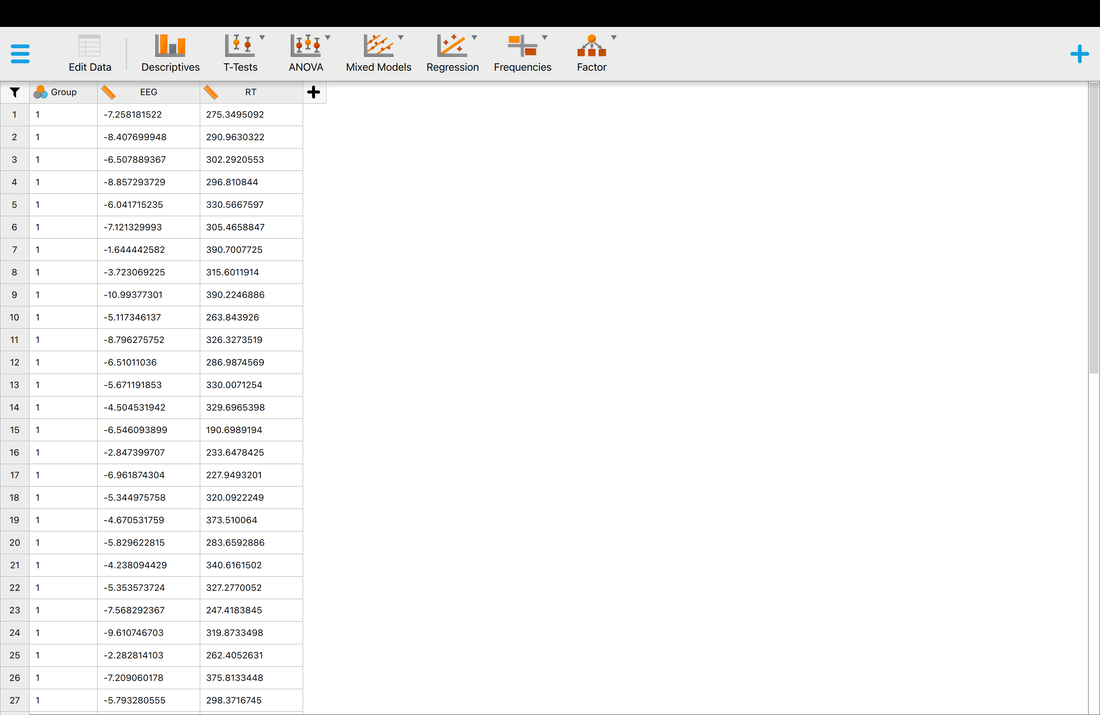

You should now see your data in R Studio as a variable called myData. You can either use the command View(myData) or click on the Environment tab and look under Data. Then click on myData to see your data.

We have loaded the data into a structure called a table (hence the command read.table). In R tables are used to hold statistical data. Note that the columns have labels V1, V2, and V3. These are the default column labels in R. To see the first column for instance you would type myData$V3 at the command prompt (note the $ - this tells R that you want the column V3 in the table myData). Do this now. You should see the numbers in the third column with numbers in [ ] brackets which provide the index of the first number in the row. For example, the second number in the third column would be identified as myData$V3[2].

In research terms, we typically set up our data in the form of a table that has columns for independent variables (things we manipulate) and dependent variables (things we measure).

You should see in myData that has a column(s) for the independent variable, here coded as a 1 or 2 and column(s) with data, were negative numbers in column 2 ranging from approximately -2 to -12 and positive numbers ranging from 200 to 500 in column 3. Here, the independent numbers of 1 and 2 represent two groups and the other numbers are just data (an EEG score in column 2 and reaction time in column 3).

Sometimes it is easier to rename the columns so they are meaningful to you. Use the following command to do this:

names(myData) = c("group","eeg","rt")

Note, the c command essentially tells R that the following stuff in brackets is a list of items. I think the names command does not need to be explained.

The renaming here is to specify in words a column label for the independent variable (group) and the two dependent variables (eeg, rt).

One of the reasons the table structure is so powerful is that you can use logical indexing. For example, to get all of the rt values for participants with a group code of 2 you just need to simply type myData$rt[myData$group==2].

The last thing we will do is define our independent variable group as a factor. This is important for doing statistical analysis as it tells R that group is an independent variable (i.e., a factor) and not data. To do this simply use:

myData$group = factor(myData$group). myData will look the same but R will know now that myData$group is an independent variable and not a dependent variable.

To see how to use factors, we can run a t-test for now - although we will explain t-tests in detail later. To run the t-test use t.test(myData$rt ~ myData$group). With this command you are literally telling R to conduct a t-test examining if there are differences in myData$rt as a function of myData$group. More on this later.

JASP

Now, we will load data into JASP which is considerably easier. One thing to note, in JASP the first row must have Column (variable) names as opposed to just the data. Download this file HERE and compare it to data.txt.

To load the data, first open JASP.

In the top left, click on the three lines and then OPEN --> Computer --> and navigate till you fine dataJASP.txt and select it. You should see the data in the main window like below. This is all we are going to do in JASP for now.

At the command prompt type library("plyr"). This tells R Studio to load the plyr library for use. You will need to do this each time you use a library, although you can set R to load libraries automatically. There are reasons for not doing this - we will get to that later.

Okay, the next thing we will do today is load some data into R.

There are a variety of ways to do this of course, but I will focus on the read.table command. We will work with the file data.txt. Download it now. I would encourage you to open this file in a text editor or EXCEL to take a look at it.

Make sure you put the data.txt file in your Working Directory.

Now, at the command prompt, ">" type the following command: myData = read.table("data.txt").

You should now see your data in R Studio as a variable called myData. You can either use the command View(myData) or click on the Environment tab and look under Data. Then click on myData to see your data.

We have loaded the data into a structure called a table (hence the command read.table). In R tables are used to hold statistical data. Note that the columns have labels V1, V2, and V3. These are the default column labels in R. To see the first column for instance you would type myData$V3 at the command prompt (note the $ - this tells R that you want the column V3 in the table myData). Do this now. You should see the numbers in the third column with numbers in [ ] brackets which provide the index of the first number in the row. For example, the second number in the third column would be identified as myData$V3[2].

In research terms, we typically set up our data in the form of a table that has columns for independent variables (things we manipulate) and dependent variables (things we measure).

You should see in myData that has a column(s) for the independent variable, here coded as a 1 or 2 and column(s) with data, were negative numbers in column 2 ranging from approximately -2 to -12 and positive numbers ranging from 200 to 500 in column 3. Here, the independent numbers of 1 and 2 represent two groups and the other numbers are just data (an EEG score in column 2 and reaction time in column 3).

Sometimes it is easier to rename the columns so they are meaningful to you. Use the following command to do this:

names(myData) = c("group","eeg","rt")

Note, the c command essentially tells R that the following stuff in brackets is a list of items. I think the names command does not need to be explained.

The renaming here is to specify in words a column label for the independent variable (group) and the two dependent variables (eeg, rt).

One of the reasons the table structure is so powerful is that you can use logical indexing. For example, to get all of the rt values for participants with a group code of 2 you just need to simply type myData$rt[myData$group==2].

The last thing we will do is define our independent variable group as a factor. This is important for doing statistical analysis as it tells R that group is an independent variable (i.e., a factor) and not data. To do this simply use:

myData$group = factor(myData$group). myData will look the same but R will know now that myData$group is an independent variable and not a dependent variable.

To see how to use factors, we can run a t-test for now - although we will explain t-tests in detail later. To run the t-test use t.test(myData$rt ~ myData$group). With this command you are literally telling R to conduct a t-test examining if there are differences in myData$rt as a function of myData$group. More on this later.

JASP

Now, we will load data into JASP which is considerably easier. One thing to note, in JASP the first row must have Column (variable) names as opposed to just the data. Download this file HERE and compare it to data.txt.

To load the data, first open JASP.

In the top left, click on the three lines and then OPEN --> Computer --> and navigate till you fine dataJASP.txt and select it. You should see the data in the main window like below. This is all we are going to do in JASP for now.

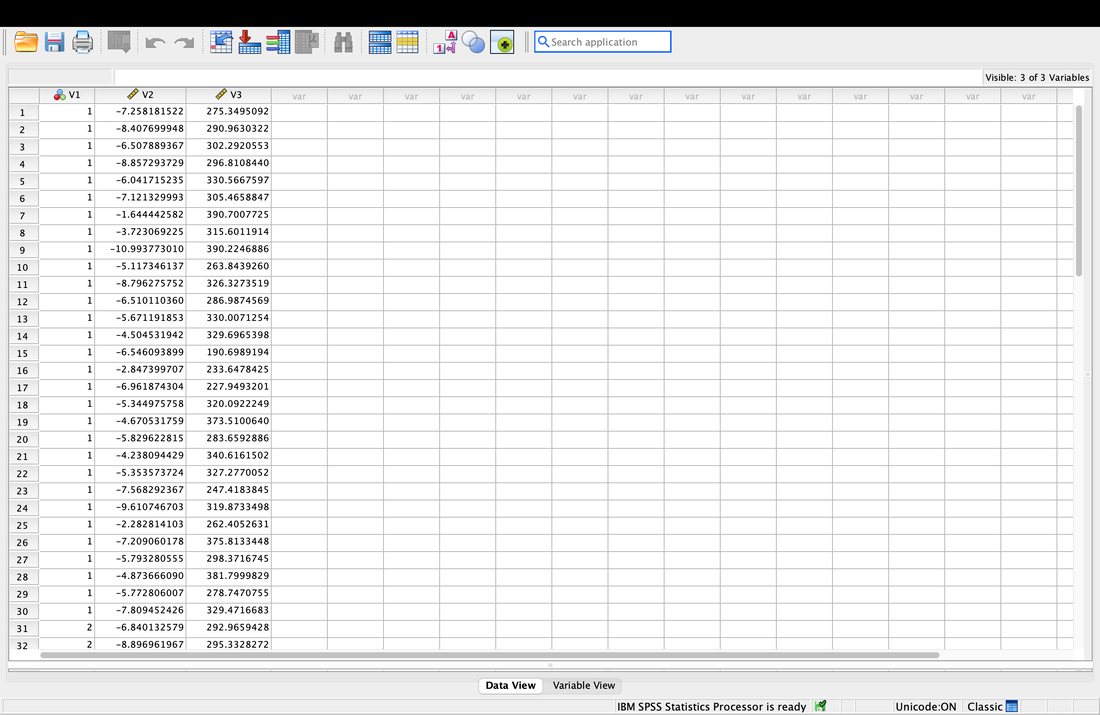

SPSS

Opening data is also easy in SPSS. Open SPSS then select:

New Dataset by double clicking on it.

On the next screen:

File --> Import Data --> Text Data

And select data.txt

An import wizard will pop up. Hit Continue on all subsequent screen until you can hit Done. In brief, these screens allow you to customize the import to match the data you have. Your data is fine just the way it is.

When done, you will see the data in three columns labelled V1, V2, and V3. Note, you could have changed the column names on the import OR you can do it here by selecting Variable View at the bottom. Change the names of the three columns to Group, EEG, and RT.

That is it for SPSS for now.

Assignment 2

1. Review everything you did above and make sure you understand it.

2. In R Studio, ensure you can load the data HERE ('data2.txt') into a variable called moreData and rename the columns as subject, group, and testscore. Make subject and group factors.

3. Question. How do you think column one would be different if this was a within / repeated measures design? (it is currently formatted as a between / independent design).

EXTRA

If you want to push ahead, see if you can run t-tests between the three groups in R Studio. Note - there are three groups. So, you cannot use the ttest command exactly the way we did above, you need to select subsets of the data as we did however.

Assignment 2

1. Review everything you did above and make sure you understand it.

2. In R Studio, ensure you can load the data HERE ('data2.txt') into a variable called moreData and rename the columns as subject, group, and testscore. Make subject and group factors.

3. Question. How do you think column one would be different if this was a within / repeated measures design? (it is currently formatted as a between / independent design).

EXTRA

If you want to push ahead, see if you can run t-tests between the three groups in R Studio. Note - there are three groups. So, you cannot use the ttest command exactly the way we did above, you need to select subsets of the data as we did however.

Lesson 3. Measures of Central Tendency

Reading: Field, Chapters 1 to 3

If you are not familiar with the concepts of the mean, median, and mode watch THIS. Or use Google. These are concepts you should already know...

Let's start by loading the file we used in the last class, data.txt, into a variable called myData and labelling the three columns as group, eeg, and rt .

One of the most important descriptive statistics is the MEAN, or AVERAGE. The MEAN is just a number that represents the average or arithmetic MEAN of the data. But think about what a MEAN really is – it is a representation of the data. If a baseball player has a mean batting percentage of 0.321 it means they contact the ball on average 32.1% of the time. That numbers describes what they can do, on average. If there was no variability, then that number is their ability in a true sense. If the player starts hitting more consistently, the mean goes up. Why? Because you have added a series of scores that push the mean up because they are greater than the previous mean – thus the average score goes up. The reverse is also true if the player starts missing. Never lose sight of the fact that the mean reflects a series of scores and that changes in those scores over time change the mean. We typically call the mean a POINT ESTIMATE of the data.

In R, to calculate the mean simply use: mean(myData$rt). Note, this is the mean of the whole data set independent of group.

Before we move on, it is important that you understand what a mean really is. Of course, it is an average, but what does that mean? (no pun intended!)

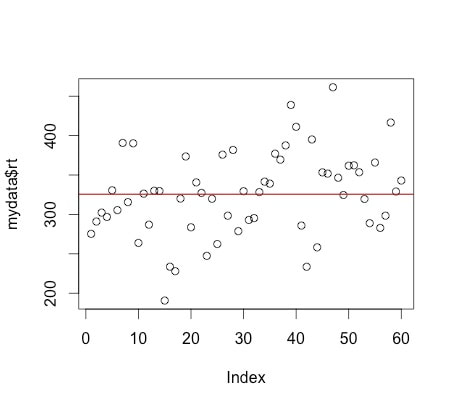

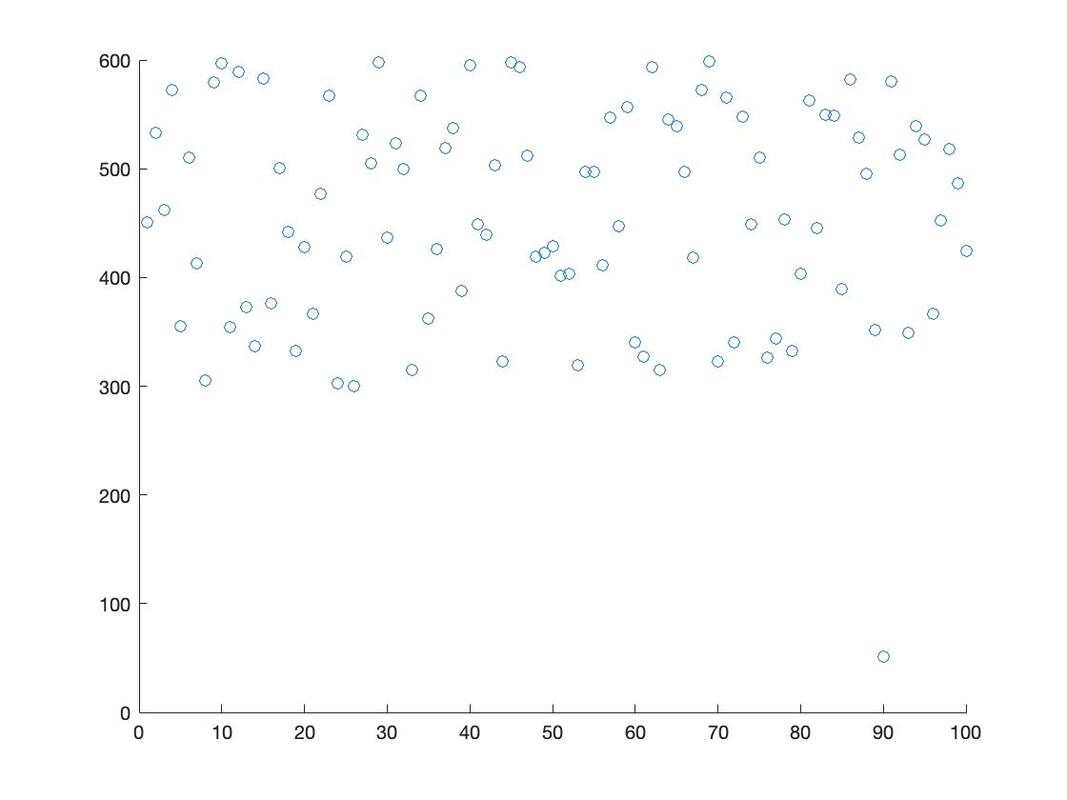

Look at the following plot of myData$rt. (Note, you can do this yourself by using plot(myData$rt).

I have also used abline(a = mean(myData$rt), b = 0, col = "red") to add a red line representing the mean. If you look at the command it specifies a y intercept a which is equal to the mean, a slope b = 0 and makes the line colour red.

If you are not familiar with the concepts of the mean, median, and mode watch THIS. Or use Google. These are concepts you should already know...

Let's start by loading the file we used in the last class, data.txt, into a variable called myData and labelling the three columns as group, eeg, and rt .

One of the most important descriptive statistics is the MEAN, or AVERAGE. The MEAN is just a number that represents the average or arithmetic MEAN of the data. But think about what a MEAN really is – it is a representation of the data. If a baseball player has a mean batting percentage of 0.321 it means they contact the ball on average 32.1% of the time. That numbers describes what they can do, on average. If there was no variability, then that number is their ability in a true sense. If the player starts hitting more consistently, the mean goes up. Why? Because you have added a series of scores that push the mean up because they are greater than the previous mean – thus the average score goes up. The reverse is also true if the player starts missing. Never lose sight of the fact that the mean reflects a series of scores and that changes in those scores over time change the mean. We typically call the mean a POINT ESTIMATE of the data.

In R, to calculate the mean simply use: mean(myData$rt). Note, this is the mean of the whole data set independent of group.

Before we move on, it is important that you understand what a mean really is. Of course, it is an average, but what does that mean? (no pun intended!)

Look at the following plot of myData$rt. (Note, you can do this yourself by using plot(myData$rt).

I have also used abline(a = mean(myData$rt), b = 0, col = "red") to add a red line representing the mean. If you look at the command it specifies a y intercept a which is equal to the mean, a slope b = 0 and makes the line colour red.

Now, what is the mean? Yes, it is an average of your the numbers in myData$rt. BUT, it is also a model for your data. What do we mean by model? The mean value (325.661) represents the data - it is the model of this data. Think of it this way - if you were going to predict the next number in the series of numbers myData$rt, what number would you pick? The best guess would be the mean as it is the average number. So, the mean is a model of the data. You can read more about this notion HERE and HERE.

Okay, now load in a new data file, data3.txt which is HERE, into a data table called income. This file contains 10000 data points, each of which represents the income of a person in a Central American country in US dollars.

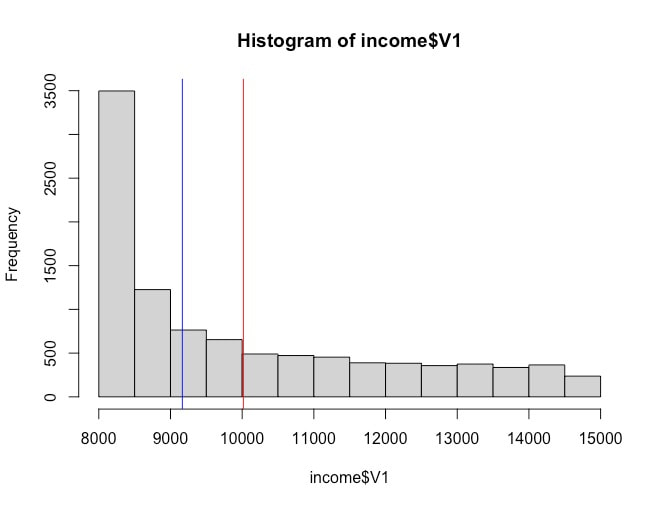

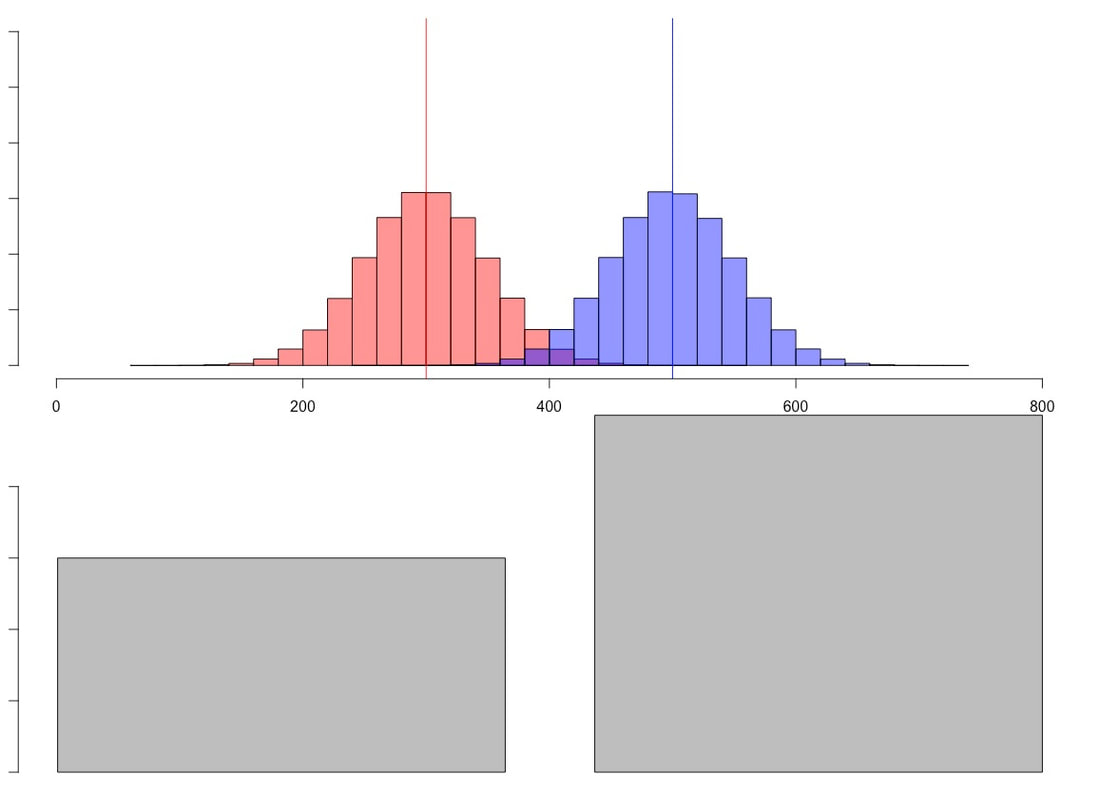

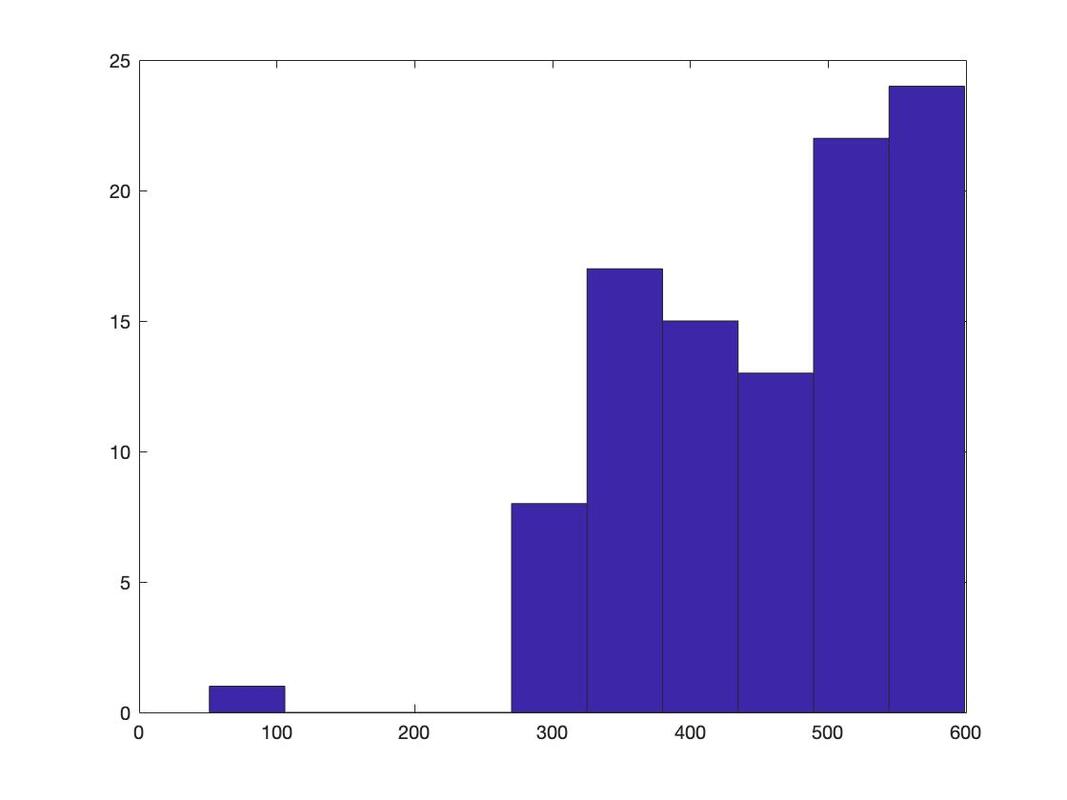

Here is a histogram of income. You can generate this yourself easily by using the command hist, e.g., hist(income$V1). Note, I have not bothered to rename the column so it has the default label V1 for variable one.

Okay, now load in a new data file, data3.txt which is HERE, into a data table called income. This file contains 10000 data points, each of which represents the income of a person in a Central American country in US dollars.

Here is a histogram of income. You can generate this yourself easily by using the command hist, e.g., hist(income$V1). Note, I have not bothered to rename the column so it has the default label V1 for variable one.

Note the shape of the data. We will data about distributions of data later, but this is what is called a skewed distribution.

Determine the mean and median of the income$V1 using mean(income$V1) and median(income$V1).

You should find that the mean is 10017 and the median is 9165. Note, to add the vertical lines I used the command abline(v = mean(income$V1), col = c("red")). and abline(v = median(income$V1), col = c("blue")).

First, you should review a full definition of a median, but it is essentially the score at the 50th percentile, or the middle of a distribution of numbers. A good definition of the median is HERE.

In terms of the data set income$V1, which is a better model for the data, the mean or the median? Think of it this way, if you moved to the country that this data is from, would you expect your income to be closer to $10017 or $9165? The correct answer in this case is $9165 given the distribution of the data. This is important to realize. A lot of time in statistics we become obsessed with using the mean as a model of our data. However, sometimes the median is a better model for your data - especially in cases like this where it is heavily skewed. Note, for normal data the mean and median should be very similar. Compare the mean and median for myData$rt. You should see that they are very close, differing by 2 points, 325.661 versus 327.7983.

I am not going to say much about the mode. Although the mode is frequently mentioned in statistics textbooks, I can honestly say in my 20+ years as a researcher I have never computed a mode other than when I teach statistics.

Logical Indexing

One of the reasons R is so powerful for statistics is that is supports logical indexing. What I mean by that, is that in our data set myData$rt, I have included codes (myData$group) to highlight that the reaction time data is from two groups of participants. So, if you type in mean(myData$rt) you get the mean across both groups of participants. However, if you wanted to simply get the mean of the myData$rt for participants in group one you would type mean(myData$rt[myData$group==1]). R will interpret this to give you the mean for myData$rt only for cases where the variable myData$group is 1.

JASP

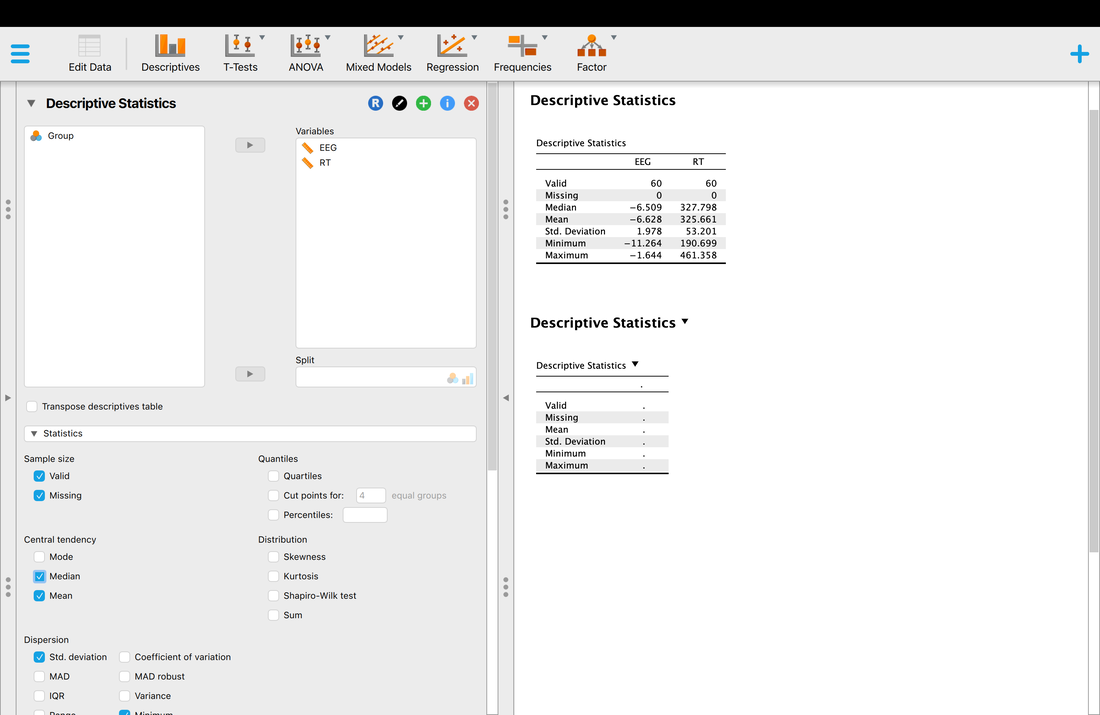

Computing descriptive statistics is quite easy. First, load your data into JASP. Make sure you use THIS data as it contains the column headers you need for JASP.

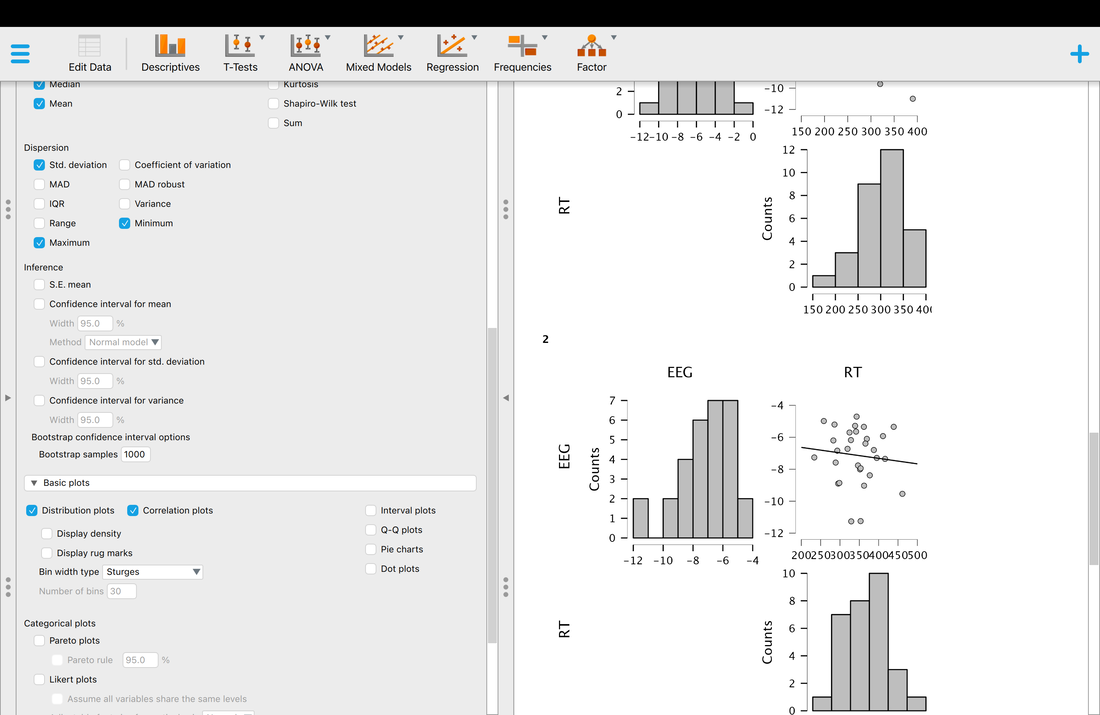

On the JASP home screen, click descriptives. You will have to select which variables you would like descriptives for. Select EEG and RT. You should see a screen that looks like the screen below. Note, the median is missing and is not computed by default. Simply click the arrow and expand the Statistics tab and select median as another statistic to compute.

Determine the mean and median of the income$V1 using mean(income$V1) and median(income$V1).

You should find that the mean is 10017 and the median is 9165. Note, to add the vertical lines I used the command abline(v = mean(income$V1), col = c("red")). and abline(v = median(income$V1), col = c("blue")).

First, you should review a full definition of a median, but it is essentially the score at the 50th percentile, or the middle of a distribution of numbers. A good definition of the median is HERE.

In terms of the data set income$V1, which is a better model for the data, the mean or the median? Think of it this way, if you moved to the country that this data is from, would you expect your income to be closer to $10017 or $9165? The correct answer in this case is $9165 given the distribution of the data. This is important to realize. A lot of time in statistics we become obsessed with using the mean as a model of our data. However, sometimes the median is a better model for your data - especially in cases like this where it is heavily skewed. Note, for normal data the mean and median should be very similar. Compare the mean and median for myData$rt. You should see that they are very close, differing by 2 points, 325.661 versus 327.7983.

I am not going to say much about the mode. Although the mode is frequently mentioned in statistics textbooks, I can honestly say in my 20+ years as a researcher I have never computed a mode other than when I teach statistics.

Logical Indexing

One of the reasons R is so powerful for statistics is that is supports logical indexing. What I mean by that, is that in our data set myData$rt, I have included codes (myData$group) to highlight that the reaction time data is from two groups of participants. So, if you type in mean(myData$rt) you get the mean across both groups of participants. However, if you wanted to simply get the mean of the myData$rt for participants in group one you would type mean(myData$rt[myData$group==1]). R will interpret this to give you the mean for myData$rt only for cases where the variable myData$group is 1.

JASP

Computing descriptive statistics is quite easy. First, load your data into JASP. Make sure you use THIS data as it contains the column headers you need for JASP.

On the JASP home screen, click descriptives. You will have to select which variables you would like descriptives for. Select EEG and RT. You should see a screen that looks like the screen below. Note, the median is missing and is not computed by default. Simply click the arrow and expand the Statistics tab and select median as another statistic to compute.

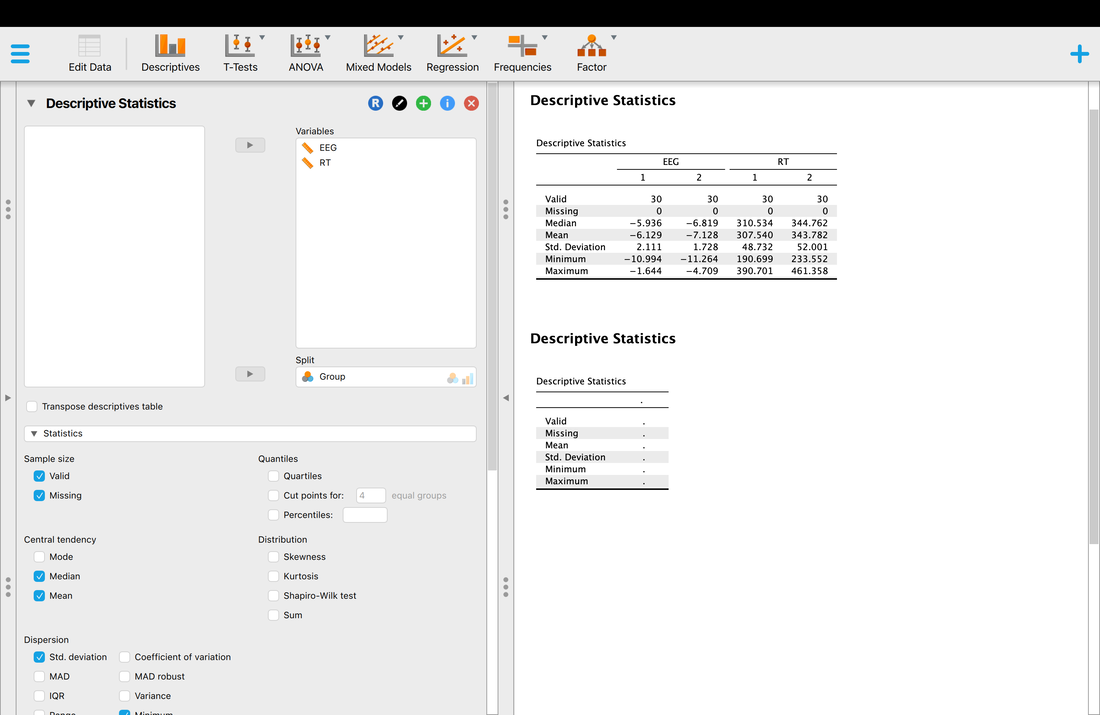

Okay, so you have your descriptive statistics for all of the data. What if you wanted them by group? Simply Enter Group as a variable into the Split box and you will have your descriptive statistics by Group.

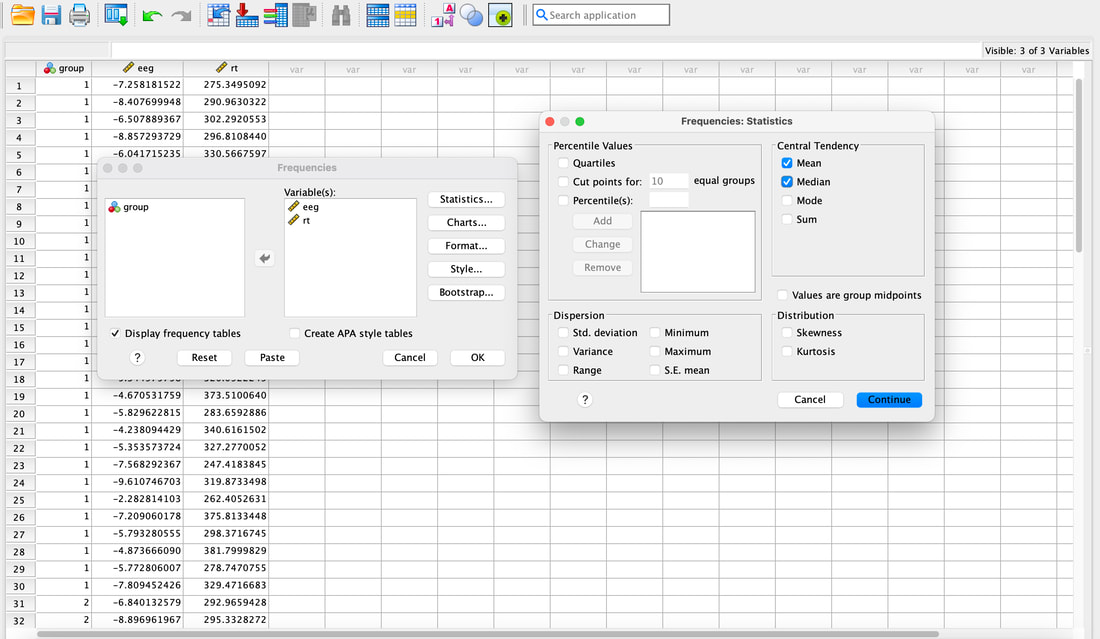

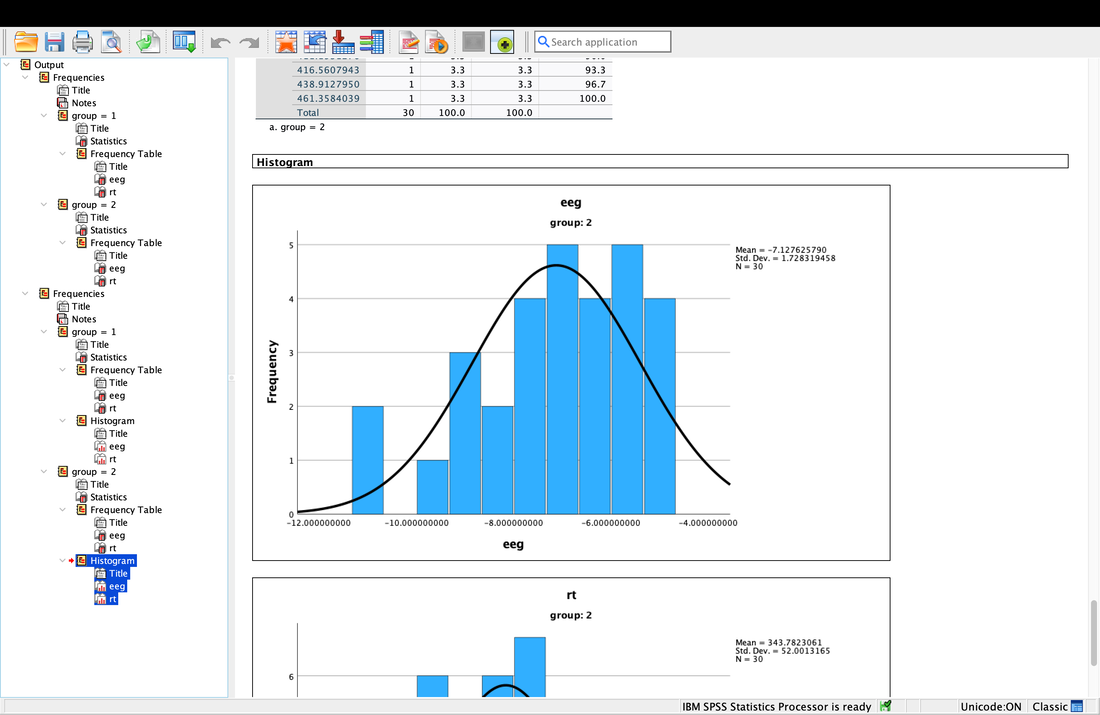

SPSS

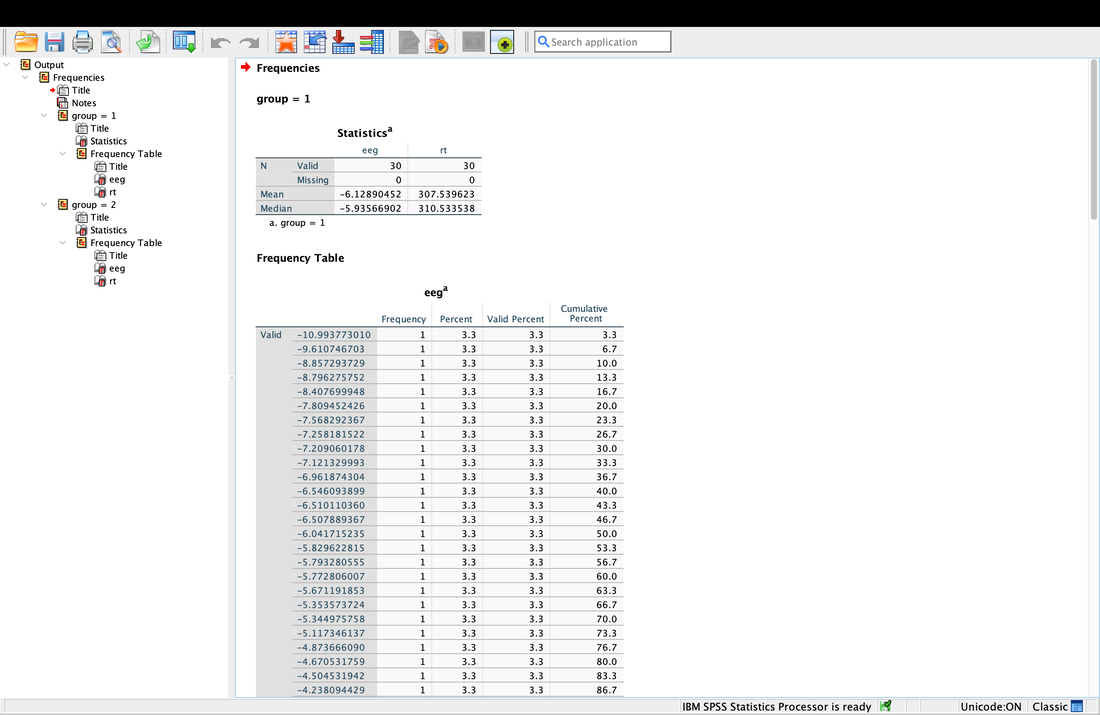

Computing descriptive statistics is easy in SPSS as well. Load data.txt into SPSS. If you click on Analyze --> Descriptive Statistics --> Frequencies. Enter eeg and rt (or V2 and V3 - I renamed the columns) as variables. Now, you can click on Statistics and select the Mean and the Median. Hit Continue then Okay and you will see the Mean and the Median.

Computing descriptive statistics is easy in SPSS as well. Load data.txt into SPSS. If you click on Analyze --> Descriptive Statistics --> Frequencies. Enter eeg and rt (or V2 and V3 - I renamed the columns) as variables. Now, you can click on Statistics and select the Mean and the Median. Hit Continue then Okay and you will see the Mean and the Median.

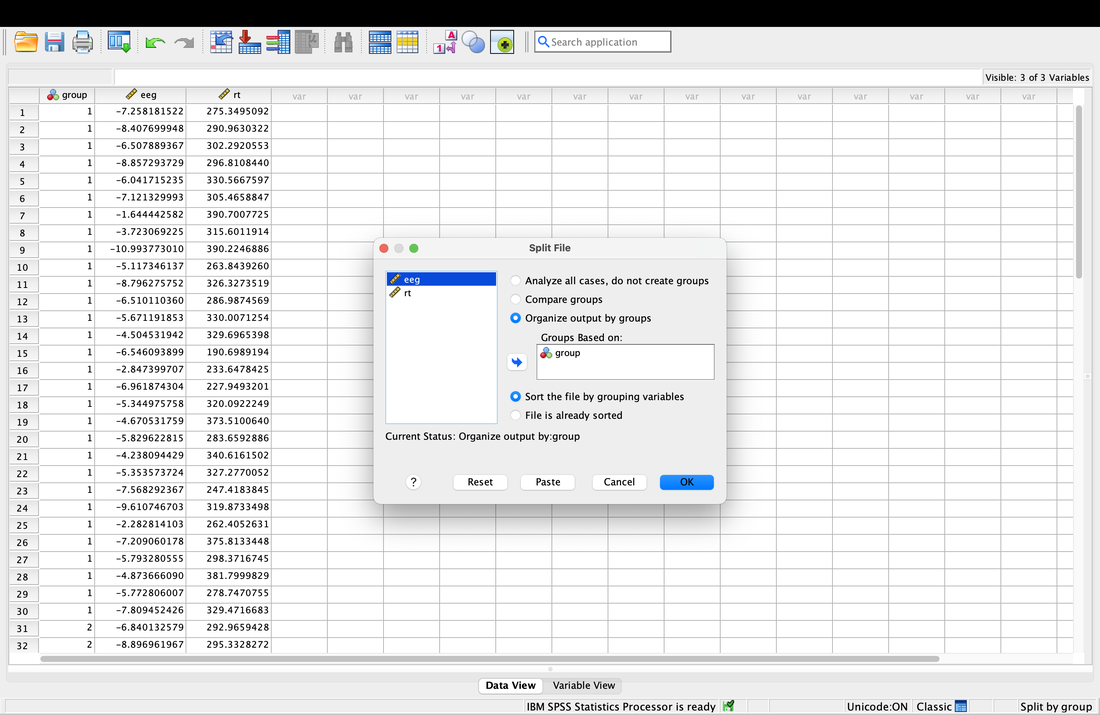

Getting descriptive statistics for groups separately in SPSS is somewhat annoying. Go to the Data tab then select Split File. This will allow you to then organize your output by Group as seen in the screenshot below. Then, repeat the steps above and you will see that you get separate descriptive statistics for each group.

Assignment 3

Your assignment for the week - compute the mean and median for groups 1 and 2 separately for both variables, myData$rt and myData$eeg. Use the plot command and the abline function to plot the mean and median for both variables (on the whole data set) as per the first plot on this page. Plot the mean in red and the median in blue. Email me your values and your plots.

Lesson 4. Variance

Reading: Field, Chapters 1 to 3

Today we are going to talk about VARIANCE. If you truly understand what variance is then you will be just fine with anything you do in statistics.

At some point before, during, or after class you should watch the following videos. First, watch THIS video. Then this ONE. And then this ONE. And finally this ONE.

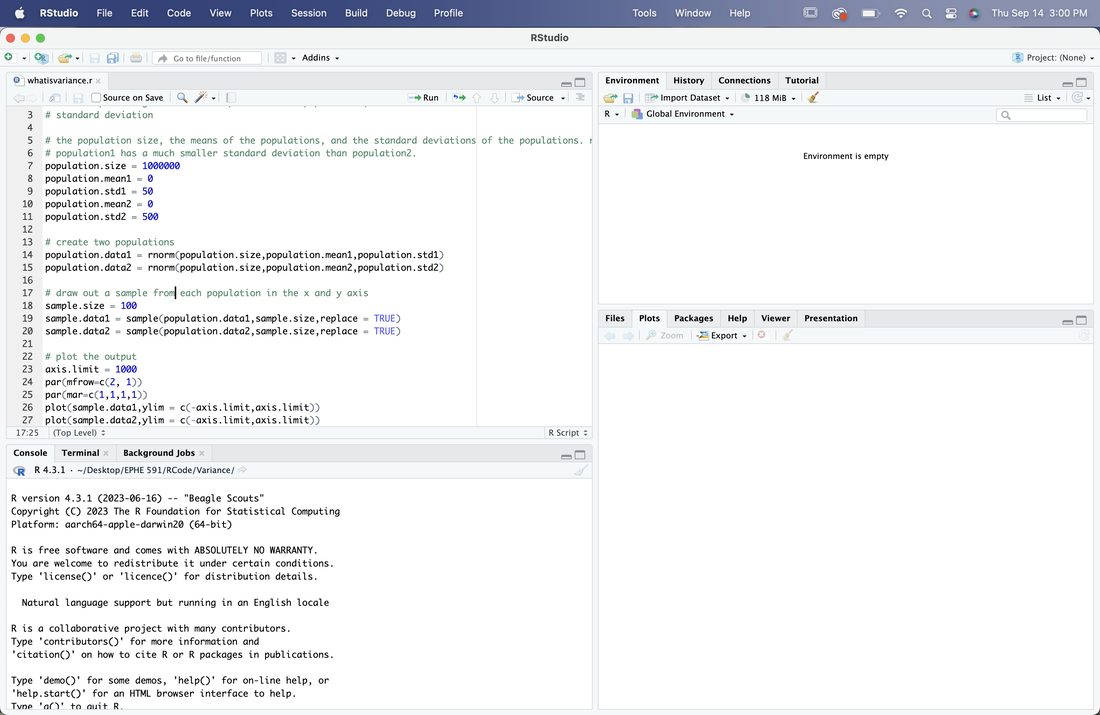

Now, R is easier to use when you just have to run scripts. A script is a bit of code that does several things sequentially.

Download the script HERE and put it in your R directory.

Open R Studio, set the working directory, and then File --> Open File and select whatisvariance.r, the script you just downloaded. Your screen should look something like this.

Today we are going to talk about VARIANCE. If you truly understand what variance is then you will be just fine with anything you do in statistics.

At some point before, during, or after class you should watch the following videos. First, watch THIS video. Then this ONE. And then this ONE. And finally this ONE.

Now, R is easier to use when you just have to run scripts. A script is a bit of code that does several things sequentially.

Download the script HERE and put it in your R directory.

Open R Studio, set the working directory, and then File --> Open File and select whatisvariance.r, the script you just downloaded. Your screen should look something like this.

The green writing after the # is a comment. These typically reflect information about the script. Now, most of this is commands you are not familiar with, which is fine. Read over the script and the comments. Then, click on the source button to run it. This will generate two plots. The plots reflect two samples drawn from two different populations with the same size and mean, but with a different standard deviation (variance). Change the standard deviations for each of the samples and run it again. What does this all mean?

Once you have done that, there are a series of R scripts below to run. Play with them and figure out what I am trying to teach you with them.

Sample Size and its Impact of Sample Means

Sample Size and its Impact on the Variance of Sample Means

Note for the next two scripts the x-axis is samples.

Sample Size and Variance

Another One on Sample Size and Variance

Now, some stuff to work through.

Load the file sampleData.txt into a table called "data" using read.table.

Check that the mean of column V1 is 298.16 and that the mean of column V2 is 350.79. Note that unless you rename the columns the default names are data$V1 and data$V2.

Take a look at the data using the View command. You will note that each of the numbers in the column is not 298.16 or 350.79, indeed, these numbers VARY quite a bit around the mean. Let's examine that variance by plotting data$V1 and data$V2. Try the following code:

par(mfrow=c(1,2)) this tells R you want to arrange two plots side by side, 1 row, 2 columns

plot(data$V1,ylim = c(250,400)) this will plot data$V1 on the left panel with y-axis limits of 250 and 400

plot(data$V2,ylim = c(250,400)) this will plot data$V2 on the right panel with y-axis limits of 250 and 400

Notice that the numbers are distributed about the mean values, in other words, there is VARIABILITY of these values around the mean.

Try the following command: data$V3 = data$V1 - mean(data$V1). This creates a new column data$V3 where each entry is just the corresponding value in data$V1 with the mean subtracted. What is the sum of this column? Hint: sum(data$V3). This will give you a really small number - in reality the sum should be zero but because of rounding error this never happens. Try plotting data$V3. You should see that is looks a lot like a plot of data$V1, however, the mean is now zero. This is of course, because you have subtracted the mean. The variability (data$V3) is simply the variability in scores about the mean. Recall that in the previous lesson we described the mean as a model. The mean is a model of the data, the best estimate of what the next score will be or of the data as a whole. However, you can also think of the data this way: data = model + variability, or data = mean + variability. Typically, in statistics, we use the word error instead of variability. The error is the difference of individual scores from the model.

As opposed to keeping all of the "error" scores, we may wish to describe how much variability there is around the mean. Create a new column, data$V4 using the following code: data$V4 = data$V3*data$V3. This column has a special name in statistics, it shows the squared errors from the mean. Importantly, this column does not sum to zero, it sums to a number (this is because there are no negative numbers once it is squared). The name for this term for a given set of data is the Sum of Squared Errors or SSE. If you divide this number by n-1 (the number of data points minus 1, so 99 for this data), you have a quantity called VARIANCE - the variance is a number that describes the variability in the data. For example, if everyone had the same score as the mean, the variance would be zero because the sum of squared errors would be zero. Compute the variance now: sum(data$V4)/99. We did not actually have to do all this work, usually we would take the short cut: var(data$V3). Note, the actual number we get for the variance only has meaning if you understand the data. For example, if I tell you a mean for a data set is 60 and the variance is 20 that does not mean a lot to you. However, if I told you that the mean of 60 was an age representing age of death and 20 was the variance, that is quite scary! Imagine what the distribution of age of death would look like! The mean of the variance also has relative meaning - you could compare the variance between two groups and that may be of interest to you.

Compute the variance for data$V2. I would recommend using the long approach we used above (by doing the math and creating new columns) and also by using the command var.

Standard Deviation

Sometimes researchers use a different quantity to represent the variability in a data set - the standard deviation. Mathematically, the standard deviation is just the square root of the variance: sqrt(var(data$V1)). There is also a simple command for it: sd(data$V1). This would be a really good time to find a textbook and read (a lot more) about variance and standard deviation.

Point versus Interval Estimates

If you recall, we discussed the mean and median as point estimates of the data. The standard deviation and variance are interval estimates of the data because they span a range of values. Ensure you understand this idea.

Sometimes statisticians like to create data to test ideas. In R this is very easy, for instance:

newData = rnorm(1000,300,25) creates a new data set called newData which has 1000 scores, a mean of 300, and a standard deviation of 25. We will need this later. But yes, I have just showed you how to create fake data.

Another way to examine variance is to use a histogram. Try the following command: hist(newData). Use a textbook and again read about histograms. Essentially, they show a breakdown of your data into bins (usually 10, but it can be any number), with a count of the number of data points that fall into the range of a given bin. If you try the following two commands: output = hist(newData) and then simply output, you should see a lot of information about what a histogram is comprised of. Make sure you know this information. NOTE. Your histogram is normally distributed, what some would call a "bell curve" or Gaussian. There are reasons for this and we will talk a lot more about normality later.

JASP and SPSS

To compute the standard deviation and variance in JASP or SPSS you would just compute descriptives as you did in Lesson 3 and select that you want to compute those two measures.

Similarly, to generate histograms in JASP you just select Basic Plots on the Descriptive tab and in SPSS you select Charts after you go Analyze --> Descriptive Statistics --> Frequencies.

Once you have done that, there are a series of R scripts below to run. Play with them and figure out what I am trying to teach you with them.

Sample Size and its Impact of Sample Means

Sample Size and its Impact on the Variance of Sample Means

Note for the next two scripts the x-axis is samples.

Sample Size and Variance

Another One on Sample Size and Variance

Now, some stuff to work through.

Load the file sampleData.txt into a table called "data" using read.table.

Check that the mean of column V1 is 298.16 and that the mean of column V2 is 350.79. Note that unless you rename the columns the default names are data$V1 and data$V2.

Take a look at the data using the View command. You will note that each of the numbers in the column is not 298.16 or 350.79, indeed, these numbers VARY quite a bit around the mean. Let's examine that variance by plotting data$V1 and data$V2. Try the following code:

par(mfrow=c(1,2)) this tells R you want to arrange two plots side by side, 1 row, 2 columns

plot(data$V1,ylim = c(250,400)) this will plot data$V1 on the left panel with y-axis limits of 250 and 400

plot(data$V2,ylim = c(250,400)) this will plot data$V2 on the right panel with y-axis limits of 250 and 400

Notice that the numbers are distributed about the mean values, in other words, there is VARIABILITY of these values around the mean.

Try the following command: data$V3 = data$V1 - mean(data$V1). This creates a new column data$V3 where each entry is just the corresponding value in data$V1 with the mean subtracted. What is the sum of this column? Hint: sum(data$V3). This will give you a really small number - in reality the sum should be zero but because of rounding error this never happens. Try plotting data$V3. You should see that is looks a lot like a plot of data$V1, however, the mean is now zero. This is of course, because you have subtracted the mean. The variability (data$V3) is simply the variability in scores about the mean. Recall that in the previous lesson we described the mean as a model. The mean is a model of the data, the best estimate of what the next score will be or of the data as a whole. However, you can also think of the data this way: data = model + variability, or data = mean + variability. Typically, in statistics, we use the word error instead of variability. The error is the difference of individual scores from the model.

As opposed to keeping all of the "error" scores, we may wish to describe how much variability there is around the mean. Create a new column, data$V4 using the following code: data$V4 = data$V3*data$V3. This column has a special name in statistics, it shows the squared errors from the mean. Importantly, this column does not sum to zero, it sums to a number (this is because there are no negative numbers once it is squared). The name for this term for a given set of data is the Sum of Squared Errors or SSE. If you divide this number by n-1 (the number of data points minus 1, so 99 for this data), you have a quantity called VARIANCE - the variance is a number that describes the variability in the data. For example, if everyone had the same score as the mean, the variance would be zero because the sum of squared errors would be zero. Compute the variance now: sum(data$V4)/99. We did not actually have to do all this work, usually we would take the short cut: var(data$V3). Note, the actual number we get for the variance only has meaning if you understand the data. For example, if I tell you a mean for a data set is 60 and the variance is 20 that does not mean a lot to you. However, if I told you that the mean of 60 was an age representing age of death and 20 was the variance, that is quite scary! Imagine what the distribution of age of death would look like! The mean of the variance also has relative meaning - you could compare the variance between two groups and that may be of interest to you.

Compute the variance for data$V2. I would recommend using the long approach we used above (by doing the math and creating new columns) and also by using the command var.

Standard Deviation

Sometimes researchers use a different quantity to represent the variability in a data set - the standard deviation. Mathematically, the standard deviation is just the square root of the variance: sqrt(var(data$V1)). There is also a simple command for it: sd(data$V1). This would be a really good time to find a textbook and read (a lot more) about variance and standard deviation.

Point versus Interval Estimates

If you recall, we discussed the mean and median as point estimates of the data. The standard deviation and variance are interval estimates of the data because they span a range of values. Ensure you understand this idea.

Sometimes statisticians like to create data to test ideas. In R this is very easy, for instance:

newData = rnorm(1000,300,25) creates a new data set called newData which has 1000 scores, a mean of 300, and a standard deviation of 25. We will need this later. But yes, I have just showed you how to create fake data.

Another way to examine variance is to use a histogram. Try the following command: hist(newData). Use a textbook and again read about histograms. Essentially, they show a breakdown of your data into bins (usually 10, but it can be any number), with a count of the number of data points that fall into the range of a given bin. If you try the following two commands: output = hist(newData) and then simply output, you should see a lot of information about what a histogram is comprised of. Make sure you know this information. NOTE. Your histogram is normally distributed, what some would call a "bell curve" or Gaussian. There are reasons for this and we will talk a lot more about normality later.

JASP and SPSS

To compute the standard deviation and variance in JASP or SPSS you would just compute descriptives as you did in Lesson 3 and select that you want to compute those two measures.

Similarly, to generate histograms in JASP you just select Basic Plots on the Descriptive tab and in SPSS you select Charts after you go Analyze --> Descriptive Statistics --> Frequencies.

Assignment 4

1. Question. Why is the sum of a column of numbers with the mean subtracted equal to zero?

2. Create three data sets, all of size 1000 and a mean of 500, but one with a standard deviation of 10, one with a standard deviation of 50, and the last with a standard deviation of 100.

3. Use the commands in this assignment to create a plot (which you will submit) that has 1 row, 3 columns and has a plots of the three data sets, Can you visually see the difference in the variance? HINT: Set your y limits to 0 and 1000 for both plots for this to work.

4. Question. Describe the increase in variability you see in words. Use a computation of the variance for each data set to justify your description.

5. For the data you created in Question 2, create a new variable called squaredErrors. This variable should have 6 columns, the first three containing your three columns of data, the next three each containing the squared errors (thus a 1000 row by 3 column matrix) for one of the columns. Compute the sum of squared errors for each of your columns and report these numbers. Also report the variance.

HINT. Creating a table from scratch in R is not as simple as you think. Here is one way to do this:

data1 = rnorm(1000,500,10)

data2 = rnorm(1000,500,50)

myTable = as.data.frame(matrix(data1))

# you have to create a table using as.data.frame and you have to specify the data is a matrix - trust me on this one.

myTable$V2 = data2

# then you just add your others variables as columns

6. Repeat Question 3, but using histograms (hist) instead of plots. For your comparison to be valid both the x and y axis limits have to be the same for all your plots! Use hist(myData$V1,xlim = c(1, 1000), ylim = c(1,300)) as a guide to help you with this. You do not need to redo the variance computations.

Submit your answers to me via email before next class.

Challenge: Do all of this in an R Script. You can answer written questions as comments and have code to do all the math and plots.

EXAM QUESTIONS

What are the mean, median, and mode?

What is mean by "The mean is a statistical model"? What are statistical models?

What is variance? What is standard deviation?

What is the difference between a point and an interval estimate?

For Next Class

Download a trial copy of GraphPad Prism HERE

1. Question. Why is the sum of a column of numbers with the mean subtracted equal to zero?

2. Create three data sets, all of size 1000 and a mean of 500, but one with a standard deviation of 10, one with a standard deviation of 50, and the last with a standard deviation of 100.

3. Use the commands in this assignment to create a plot (which you will submit) that has 1 row, 3 columns and has a plots of the three data sets, Can you visually see the difference in the variance? HINT: Set your y limits to 0 and 1000 for both plots for this to work.

4. Question. Describe the increase in variability you see in words. Use a computation of the variance for each data set to justify your description.

5. For the data you created in Question 2, create a new variable called squaredErrors. This variable should have 6 columns, the first three containing your three columns of data, the next three each containing the squared errors (thus a 1000 row by 3 column matrix) for one of the columns. Compute the sum of squared errors for each of your columns and report these numbers. Also report the variance.

HINT. Creating a table from scratch in R is not as simple as you think. Here is one way to do this:

data1 = rnorm(1000,500,10)

data2 = rnorm(1000,500,50)

myTable = as.data.frame(matrix(data1))

# you have to create a table using as.data.frame and you have to specify the data is a matrix - trust me on this one.

myTable$V2 = data2

# then you just add your others variables as columns

6. Repeat Question 3, but using histograms (hist) instead of plots. For your comparison to be valid both the x and y axis limits have to be the same for all your plots! Use hist(myData$V1,xlim = c(1, 1000), ylim = c(1,300)) as a guide to help you with this. You do not need to redo the variance computations.

Submit your answers to me via email before next class.

Challenge: Do all of this in an R Script. You can answer written questions as comments and have code to do all the math and plots.

EXAM QUESTIONS

What are the mean, median, and mode?

What is mean by "The mean is a statistical model"? What are statistical models?

What is variance? What is standard deviation?

What is the difference between a point and an interval estimate?

For Next Class

Download a trial copy of GraphPad Prism HERE

Lesson 5. Visualizing Data

Reading: Field, Chapter 4

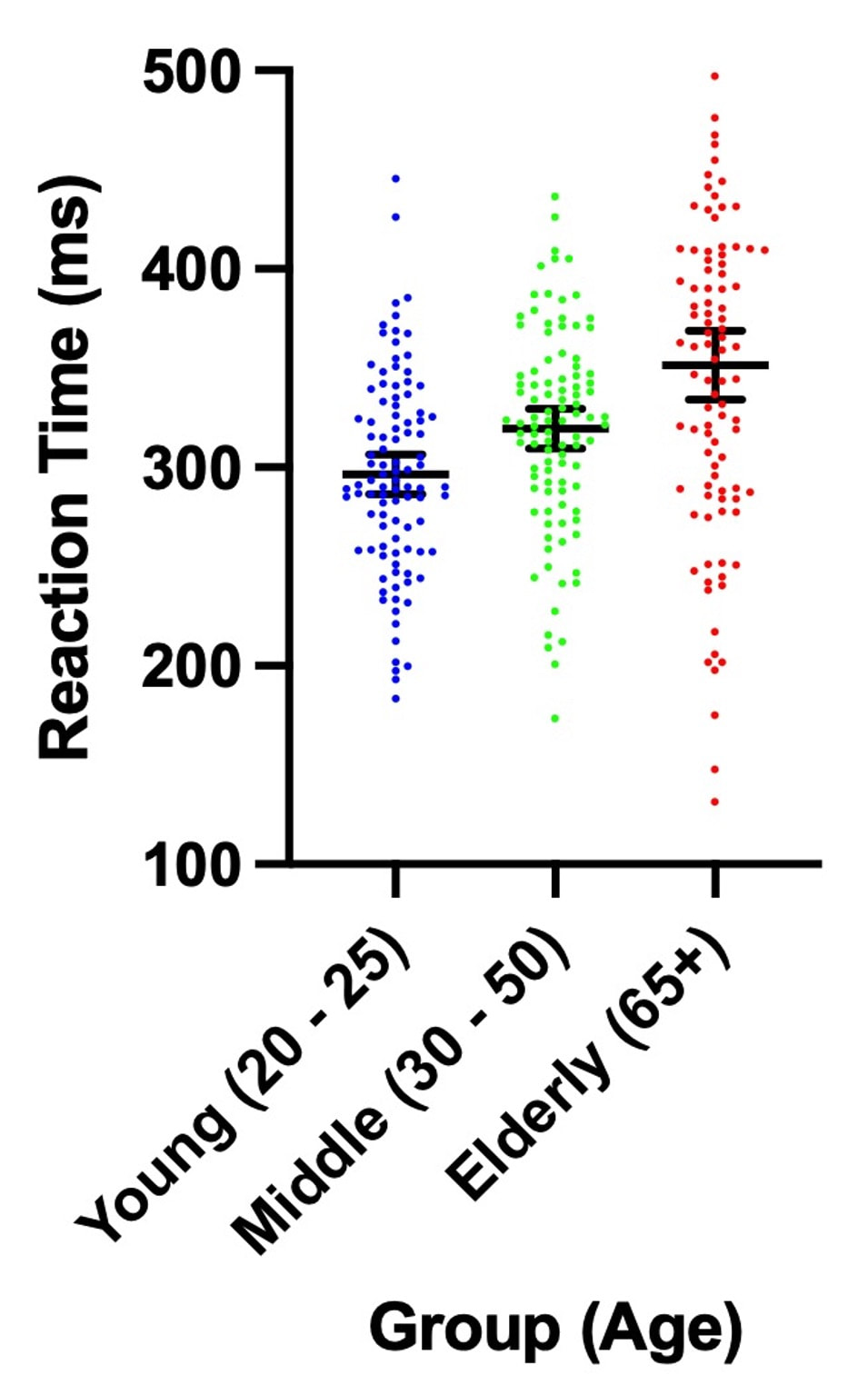

RULE # 1: DO NOT MAKE BORING PLOTS WITH LITTLE INFORMATION ON THEM, PUT AS MUCH INFORMATION (DATA) AS YOU CAN ON YOUR PLOTS

RULE # 2: NEVER PLOT WITH EXCEL

RULE # 3: NEVER FORGET RULES #1 AND #2

Consider the plot below, made with the R code HERE. The top plot conveys a lot of information. The bottom one, well, never do that...

RULE # 1: DO NOT MAKE BORING PLOTS WITH LITTLE INFORMATION ON THEM, PUT AS MUCH INFORMATION (DATA) AS YOU CAN ON YOUR PLOTS

RULE # 2: NEVER PLOT WITH EXCEL

RULE # 3: NEVER FORGET RULES #1 AND #2

Consider the plot below, made with the R code HERE. The top plot conveys a lot of information. The bottom one, well, never do that...

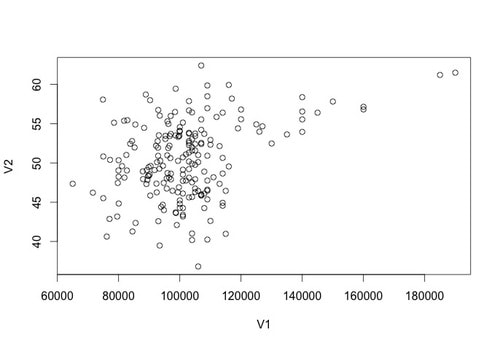

In terms of providing the viewer lots of information, the plots below contain the same point estimates, but the one on the right has so much more information!

Try reading this paper by Rougier et al. 2014 for some ideas!

The Grammar of Graphics is a MUST READ!

Need help with colour, check out THIS website!

And for some inspiration, look HERE!

Finally, in R you want to learn to use ggplot2 as soon as possible!

A Note on Error Bars

Plotting Basics

Load the data file HERE into a table called "data".

Next, name the three columns "subject", "condition", and "rt". You will note there are three groups of 50 participants in this data set.

Let's generate a basic scatter plot: plot(data$rt)

You will note this command generates a scatter plot of the values in data$rt with subject as the x axis value and rt as the y axis value.

If you wanted to represent this data as a bar plot you would simply use: barplot(data$rt)

For now, let's return to a basic scatter plot: plot(data$rt)

If you wanted to visualize the group differences, you could use a plot like this: plot(data$rt~data$condition)

This essentially tells R that you want a scatter plot of data$rt but instead of data$subject on the x axis you would like to use data$condition. Try plot(data$rt~data$subject) as a proof of concept. The default in R for the plot command is to assume the x axis reflects each data point if nothing else is specified. Note, this will not work for bar plots - you need to generate the means in advance. See below.

You can add other information to your plot. For example, if you want to label the plot axes in R you would do the following:

plot(data$rt~data$condition, main="Condition Effect", xlab="Condition", ylab="Reaction Time (ms)", ylim=c(0, 500))

Go HERE for a lot more information information about what you can add to plots.

We can make our graph even more sophisticated. Let's highlight the means for each group.

means = aggregate(rt~condition,data,mean)

This command gets the mean values for each condition in the table "data" and assigns them to the variable means. Type "means" to check.

Now try: points(means$condition,means$rt,pch=16,col='red')

You should see a red circle reflecting the mean in the middle of each scatter plot for condition.

A different way to visualize this data would be to plot each group separately using a line to reflect the mean as per a previous assignment. You would do this as follows:

par(mfrow=c(1,3))

plot(data$rt[data$condition==1])

abline(a = means$rt[1], b = 0, col = "red")

plot(data$rt[data$condition==2])

abline(a = means$rt[2], b = 0, col = "red")

plot(data$rt[data$condition==3])

abline(a = means$rt[3], b = 0, col = "red")

You will note the yaxis is different for each plot. You could fix this with ylim which we learned earlier.

More details on plotting in R can be found HERE and HERE.

GRAPHPAD PRISM

Showing you how to do all of this in GraphPad Prism is beyond the scope of this tutorial. However, if you open this Prism FILE then you will see how easy it is.

Assignment Question

1. Using a data set of your choice generate a plot similar to the one immediately above. Ensure you title the plot, label the axes, and make sure the x and y limits are the same on all of your sub plots.

Next, name the three columns "subject", "condition", and "rt". You will note there are three groups of 50 participants in this data set.

Let's generate a basic scatter plot: plot(data$rt)

You will note this command generates a scatter plot of the values in data$rt with subject as the x axis value and rt as the y axis value.

If you wanted to represent this data as a bar plot you would simply use: barplot(data$rt)

For now, let's return to a basic scatter plot: plot(data$rt)

If you wanted to visualize the group differences, you could use a plot like this: plot(data$rt~data$condition)

This essentially tells R that you want a scatter plot of data$rt but instead of data$subject on the x axis you would like to use data$condition. Try plot(data$rt~data$subject) as a proof of concept. The default in R for the plot command is to assume the x axis reflects each data point if nothing else is specified. Note, this will not work for bar plots - you need to generate the means in advance. See below.

You can add other information to your plot. For example, if you want to label the plot axes in R you would do the following:

plot(data$rt~data$condition, main="Condition Effect", xlab="Condition", ylab="Reaction Time (ms)", ylim=c(0, 500))

Go HERE for a lot more information information about what you can add to plots.

We can make our graph even more sophisticated. Let's highlight the means for each group.

means = aggregate(rt~condition,data,mean)

This command gets the mean values for each condition in the table "data" and assigns them to the variable means. Type "means" to check.

Now try: points(means$condition,means$rt,pch=16,col='red')

You should see a red circle reflecting the mean in the middle of each scatter plot for condition.

A different way to visualize this data would be to plot each group separately using a line to reflect the mean as per a previous assignment. You would do this as follows:

par(mfrow=c(1,3))

plot(data$rt[data$condition==1])

abline(a = means$rt[1], b = 0, col = "red")

plot(data$rt[data$condition==2])

abline(a = means$rt[2], b = 0, col = "red")

plot(data$rt[data$condition==3])

abline(a = means$rt[3], b = 0, col = "red")

You will note the yaxis is different for each plot. You could fix this with ylim which we learned earlier.

More details on plotting in R can be found HERE and HERE.

GRAPHPAD PRISM

Showing you how to do all of this in GraphPad Prism is beyond the scope of this tutorial. However, if you open this Prism FILE then you will see how easy it is.

Assignment Question

1. Using a data set of your choice generate a plot similar to the one immediately above. Ensure you title the plot, label the axes, and make sure the x and y limits are the same on all of your sub plots.

Bar Graphs

Now you will learn how to plot bar graphs in R. Load the data HERE into a table named data. Name the columns subject, group, and rt.

If you simply type in barplot(data$rt) you will get a bar plot of data$rt with a column representing each data point. There is a lot you can do with a bar plot in R, so lets work through some of the basics.

Let's say you wanted to colour the bars in green, you would first need the colour name in R. You can find a guide HERE.

Try this. barplot(data$rt, col = 'forestgreen')

Alternatively, use barplot(data$rt, col = 'forestgreen', border = NA) if you do not want borders on the bars.

If you wanted to color subsets of the bars, you would have to specify colors for each bar. You could do something like this:

cols = data$subject

cols[1:50] = c("red")

cols[51:100] = c("blue")

cols[101:150] = c("green")

barplot(data$rt, col = cols)

Some other useful features:

To label the x axis:

barplot(data$rt,xlab = 'Subject')

To label the y axis:

barplot(data$rt,ylab = 'Reaction Time (ms)')

To add a title:

barplot(data$rt,main = 'Insert Title Here')

To add a title and a subtitle:

barplot(data$rt,main = 'Insert Title Here', sub = 'My Subtitle')

To set x limits to the x axis:

barplot(data$rt, xlim = c(1, 50))

And to set y limits to the y axis:

barplot(data$rt, ylim = c(150, 550))

A full list of the parameters for barplot can be found HERE.

Plotting Means With Error Bars

A common thing you may want to do with this data is to generate a bar plot of the means of the three groups with an error bar for each column.

I could have a whole class on error bars, but it would not be that exciting. We will come back to error bars again and again, but the short version is from a statistics perspective the BEST error bar is a 95% confidence interval - a lot more on this later in the course. Other popular error bars are the standard deviation and the standard error of the mean.

This is a bit complicated but I want to plant the seed. Error bars should reflect the variance that is important to the comparison. For BETWEEN designs the standard deviation is appropriate for each group, because the variance within the group is what impacts the test. For a WITHIN design, variance of the conditions does not matter. But, the standard deviation of the difference scores does. Why do you think this is?

Now, let's make some error bars. For now, I am just going to show you how to compute a 95% CI. We will use these more later in the course and talk about what they mean. For now, if you are curious watch THIS and THIS.

Note, I have renamed the column names using:

names(myData) = c("subject","condition","rt")

Step One. Get the mean for each group:

means = aggregate(rt~condition,data,mean)

N.B., for simplicities sake, I am going to remove the means from the mini data frame they have been put in:

means = means$rt

Step Two. Get the standard deviation for each group.

sds = aggregate(rt~condition,data,sd)

sds = sds$rt(same removal of the data frame here)

Step Three. Compute the CI size for each group.

cis = abs(qt(0.05,49) * sds / sqrt(50))

Note, if you want to know what these commands do use ?abs, ?qt, and ?sqrt.

Step Four. Plot the bar plot of the means.

bp = barplot(means, ylim = c(200,400))

Note, we are assigning the barplot a name, bp, so we can use it below.

Step Five. Add the error bars.

arrows(bp,means-cis, bp, means+cis, angle=90, code=3, length=0.5)

This is actually a quick R cheat using the arrows command, but it works! Essentially, you are specifying arrows draw between the point (bp,means-cis) and point (bp,means+cis) with the arrows heads at an angle of 90 degrees, on each end of the line (code = 3) and with a length of 0.5. If you want to get serious about plotting, use a plotting program or see some of the more advanced lessons!

GRAPHPAD PRISM

Showing you how to do all of this in GraphPad Prism is beyond the scope of this tutorial. However, if you open this Prism FILE then you will see how easy it is.

Assignment Question

2. Using a data set of your choice generate a boxplot similar to the one immediately above. Ensure you title the plot, label the axes, and make sure the x and y limits are appropriate.

If you simply type in barplot(data$rt) you will get a bar plot of data$rt with a column representing each data point. There is a lot you can do with a bar plot in R, so lets work through some of the basics.

Let's say you wanted to colour the bars in green, you would first need the colour name in R. You can find a guide HERE.

Try this. barplot(data$rt, col = 'forestgreen')

Alternatively, use barplot(data$rt, col = 'forestgreen', border = NA) if you do not want borders on the bars.

If you wanted to color subsets of the bars, you would have to specify colors for each bar. You could do something like this:

cols = data$subject

cols[1:50] = c("red")

cols[51:100] = c("blue")

cols[101:150] = c("green")

barplot(data$rt, col = cols)

Some other useful features:

To label the x axis:

barplot(data$rt,xlab = 'Subject')

To label the y axis:

barplot(data$rt,ylab = 'Reaction Time (ms)')

To add a title:

barplot(data$rt,main = 'Insert Title Here')

To add a title and a subtitle:

barplot(data$rt,main = 'Insert Title Here', sub = 'My Subtitle')

To set x limits to the x axis:

barplot(data$rt, xlim = c(1, 50))

And to set y limits to the y axis:

barplot(data$rt, ylim = c(150, 550))

A full list of the parameters for barplot can be found HERE.

Plotting Means With Error Bars

A common thing you may want to do with this data is to generate a bar plot of the means of the three groups with an error bar for each column.

I could have a whole class on error bars, but it would not be that exciting. We will come back to error bars again and again, but the short version is from a statistics perspective the BEST error bar is a 95% confidence interval - a lot more on this later in the course. Other popular error bars are the standard deviation and the standard error of the mean.

This is a bit complicated but I want to plant the seed. Error bars should reflect the variance that is important to the comparison. For BETWEEN designs the standard deviation is appropriate for each group, because the variance within the group is what impacts the test. For a WITHIN design, variance of the conditions does not matter. But, the standard deviation of the difference scores does. Why do you think this is?

Now, let's make some error bars. For now, I am just going to show you how to compute a 95% CI. We will use these more later in the course and talk about what they mean. For now, if you are curious watch THIS and THIS.

Note, I have renamed the column names using:

names(myData) = c("subject","condition","rt")

Step One. Get the mean for each group:

means = aggregate(rt~condition,data,mean)

N.B., for simplicities sake, I am going to remove the means from the mini data frame they have been put in:

means = means$rt

Step Two. Get the standard deviation for each group.

sds = aggregate(rt~condition,data,sd)

sds = sds$rt(same removal of the data frame here)

Step Three. Compute the CI size for each group.

cis = abs(qt(0.05,49) * sds / sqrt(50))

Note, if you want to know what these commands do use ?abs, ?qt, and ?sqrt.

Step Four. Plot the bar plot of the means.

bp = barplot(means, ylim = c(200,400))

Note, we are assigning the barplot a name, bp, so we can use it below.

Step Five. Add the error bars.

arrows(bp,means-cis, bp, means+cis, angle=90, code=3, length=0.5)

This is actually a quick R cheat using the arrows command, but it works! Essentially, you are specifying arrows draw between the point (bp,means-cis) and point (bp,means+cis) with the arrows heads at an angle of 90 degrees, on each end of the line (code = 3) and with a length of 0.5. If you want to get serious about plotting, use a plotting program or see some of the more advanced lessons!

GRAPHPAD PRISM

Showing you how to do all of this in GraphPad Prism is beyond the scope of this tutorial. However, if you open this Prism FILE then you will see how easy it is.

Assignment Question

2. Using a data set of your choice generate a boxplot similar to the one immediately above. Ensure you title the plot, label the axes, and make sure the x and y limits are appropriate.

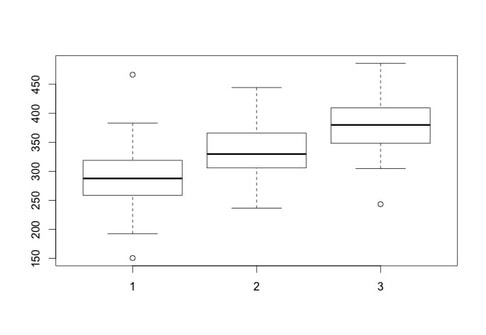

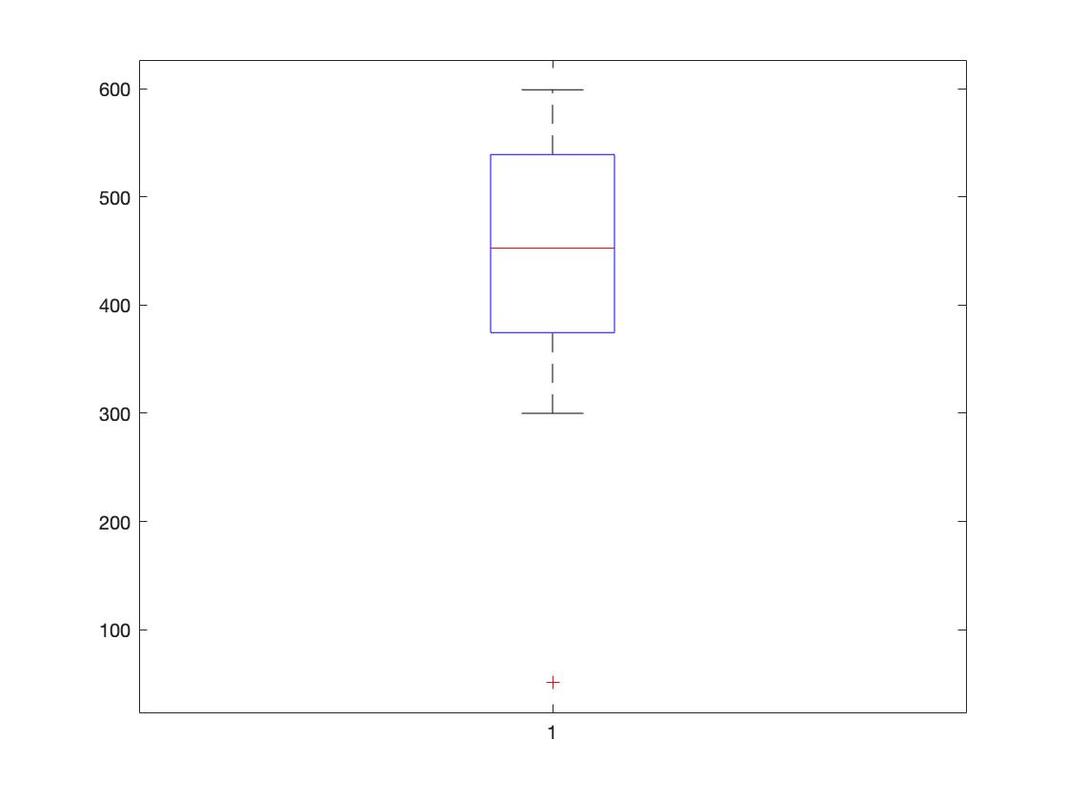

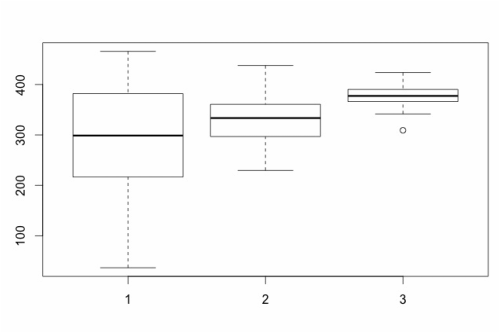

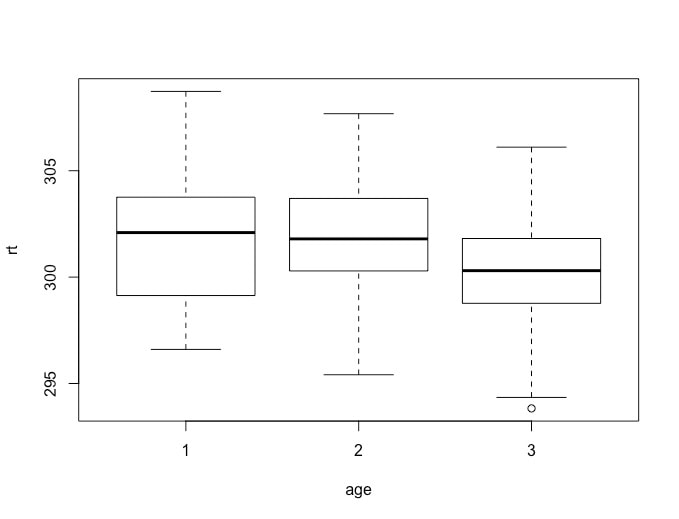

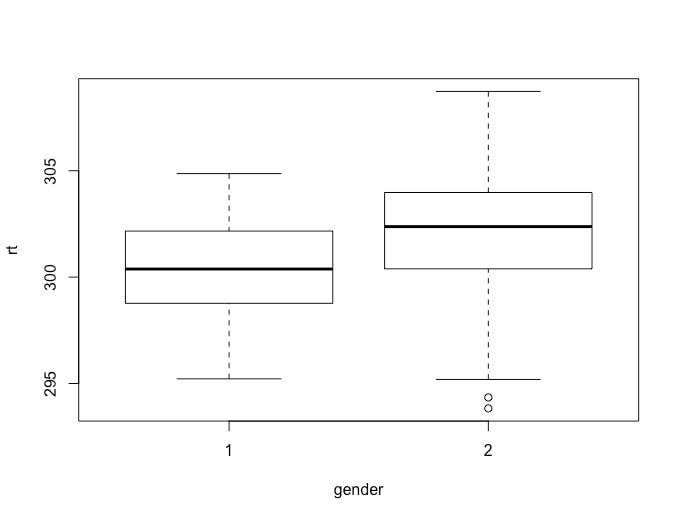

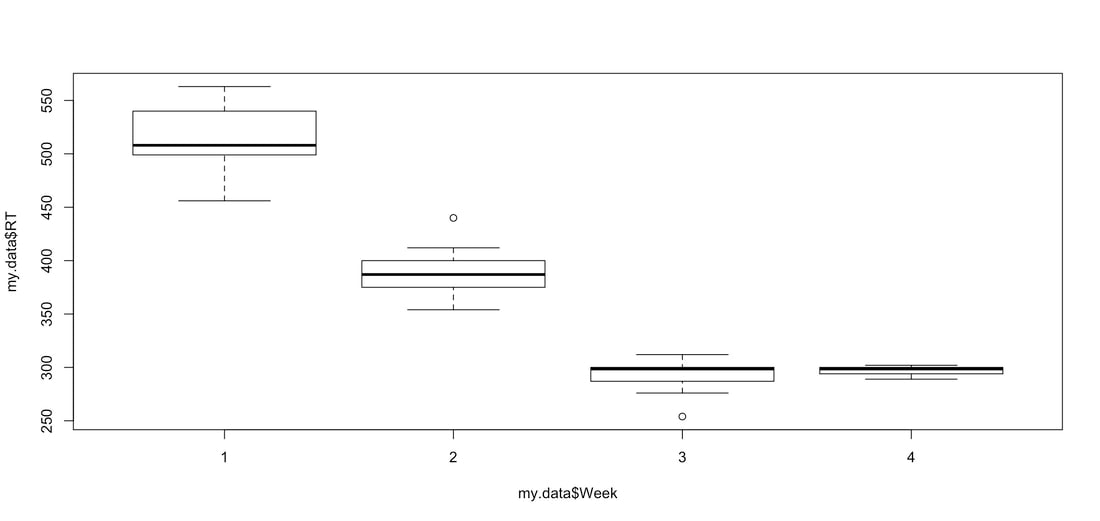

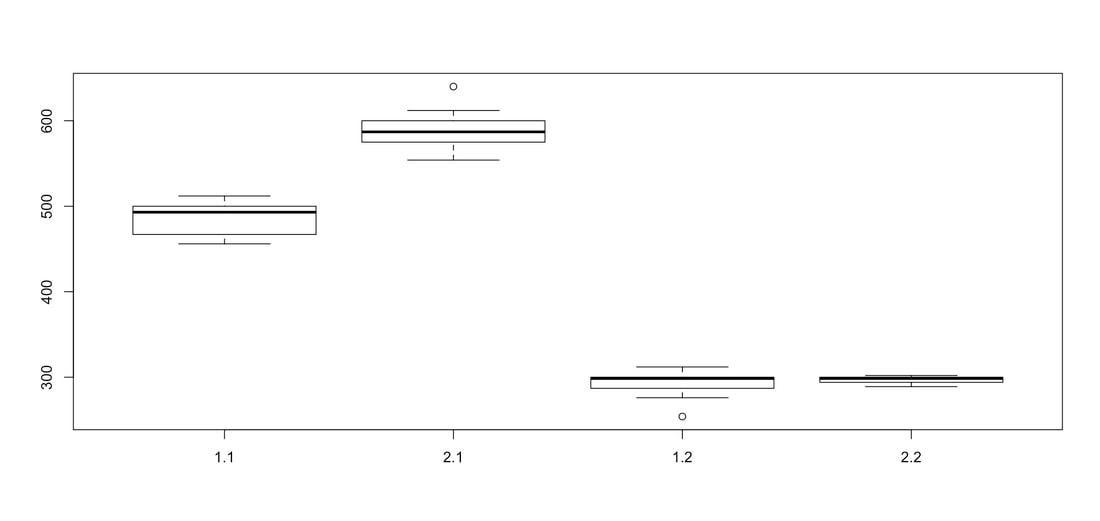

Boxplots

There are other plots in R that can be very useful - a classic one for examining data is a box plot.

In this lesson you will learn how to plot bar graphs in R. Load the data HERE into a table named data. Name the columns subject, group, and rt.

Generating a boxplot if very easy. Simply use:

boxplot(data$rt~data$group)

Now, what is important with a box plot is what information is shown. The line in the middle of each "box" is the median score for the group. The bottom of the box represents the first quartile (or 25th percentile) score. The top of the box represents the third quartile (or 75th percentile) score. The bottom and the top of the "error bars" represent the minimum and maximum scores respectively. Note, these are not the mathematical minimum and maximum but the boxplot minimum and maximum. To understand these scores, we must define the interquartile range (IQR). This is the range from the 25th percentile score to the 75th percentile score - the height of the box itself. The minimum is Q1 (the 25th percentile score) - 1.5 x IQR. The maximum is Q3 (the 75th percentile score) + 1.5 * IQR.

What of the circles? The circles represent outlying scores - these are scores that the function generating the box plot decides are too extreme to actually be in the group. Let's verify this quickly with the first group:

group1 = data$rt[data$group == 1]

median(group1)

min(group1)

max(group1)

You will note the values returned for min and max are the circles shown on the boxplot as these are the true mathematical minimum and maximum scores.

If you want to see what the numbers the box plot is computing - try this:

stuff = boxplot(data$rt~data$group)

stuff

What you should see here is that the box plot is returning all of the information in the plot and more - even the confidence intervals for the groups.

For a list of all the properties of a boxplots, use

?boxplot

Note, all of the classic plot properties such as titles, axis labels, and axis limits can be set.

GRAPHPAD PRISM

Showing you how to do all of this in GraphPad Prism is beyond the scope of this tutorial. However, if you open this Prism FILE then you will see how easy it is.

Assignment Question

3. Using a data set of your choice generate a boxplot similar to the one immediately above. Ensure you title the plot, label the axes, and make sure the x and y limits are appropriate.

In this lesson you will learn how to plot bar graphs in R. Load the data HERE into a table named data. Name the columns subject, group, and rt.

Generating a boxplot if very easy. Simply use:

boxplot(data$rt~data$group)

Now, what is important with a box plot is what information is shown. The line in the middle of each "box" is the median score for the group. The bottom of the box represents the first quartile (or 25th percentile) score. The top of the box represents the third quartile (or 75th percentile) score. The bottom and the top of the "error bars" represent the minimum and maximum scores respectively. Note, these are not the mathematical minimum and maximum but the boxplot minimum and maximum. To understand these scores, we must define the interquartile range (IQR). This is the range from the 25th percentile score to the 75th percentile score - the height of the box itself. The minimum is Q1 (the 25th percentile score) - 1.5 x IQR. The maximum is Q3 (the 75th percentile score) + 1.5 * IQR.

What of the circles? The circles represent outlying scores - these are scores that the function generating the box plot decides are too extreme to actually be in the group. Let's verify this quickly with the first group:

group1 = data$rt[data$group == 1]

median(group1)

min(group1)

max(group1)

You will note the values returned for min and max are the circles shown on the boxplot as these are the true mathematical minimum and maximum scores.

If you want to see what the numbers the box plot is computing - try this:

stuff = boxplot(data$rt~data$group)

stuff

What you should see here is that the box plot is returning all of the information in the plot and more - even the confidence intervals for the groups.

For a list of all the properties of a boxplots, use

?boxplot

Note, all of the classic plot properties such as titles, axis labels, and axis limits can be set.

GRAPHPAD PRISM

Showing you how to do all of this in GraphPad Prism is beyond the scope of this tutorial. However, if you open this Prism FILE then you will see how easy it is.

Assignment Question

3. Using a data set of your choice generate a boxplot similar to the one immediately above. Ensure you title the plot, label the axes, and make sure the x and y limits are appropriate.

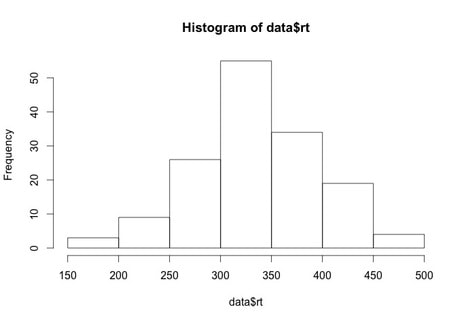

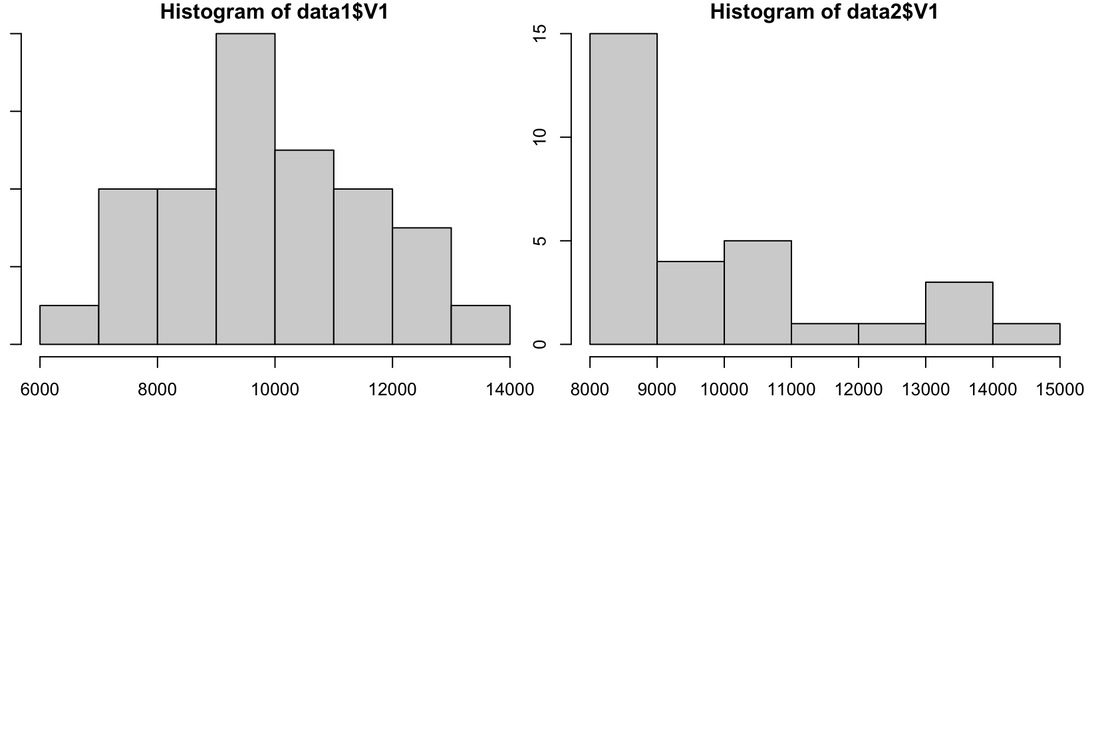

Histograms

Again, we will work with the same data as before. Load the data HERE into a table named data. Name the columns subject, group, and rt.

Generating a histogram is very easy in R. Try the following:

hist(data$rt)

What you will see is a simply histogram like this.

Histograms provide an excellent representation of your data. For example, you could use something like the bar plot we generated a few exercises ago or you could show something like this:

hist(data$rt[data$group==1],col=rgb(1,0,0,0.5),ylim = c(0,25))

hist(data$rt[data$group==3],col=rgb(0,0,1,0.5),ylim = c(0,25),add=T)

Now this plot only overlays groups 1 and 3 but you get the idea - there is a lot more information here than a simple bar plot.

You can also pull information out of a histogram. Try:

stuff = hist(data$rt)

stuff

As with the boxplot command, you will see that the histogram generates a lot of statistical information.

For a list of all the properties of a histogram, use

?hist

Note, all of the classic plot properties such as titles, axis labels, and axis limits can be set.

GRAPHPAD PRISM

Showing you how to do all of this in GraphPad Prism is beyond the scope of this tutorial. However, if you open this Prism FILE then you will see how easy it is.

Assignment Question

4. Using a data set of your choice generate a histogram where you overlay three data sets. To make your life easier... make sure they do not overlap too much!

Generating a histogram is very easy in R. Try the following:

hist(data$rt)

What you will see is a simply histogram like this.

Histograms provide an excellent representation of your data. For example, you could use something like the bar plot we generated a few exercises ago or you could show something like this:

hist(data$rt[data$group==1],col=rgb(1,0,0,0.5),ylim = c(0,25))

hist(data$rt[data$group==3],col=rgb(0,0,1,0.5),ylim = c(0,25),add=T)

Now this plot only overlays groups 1 and 3 but you get the idea - there is a lot more information here than a simple bar plot.

You can also pull information out of a histogram. Try:

stuff = hist(data$rt)

stuff

As with the boxplot command, you will see that the histogram generates a lot of statistical information.

For a list of all the properties of a histogram, use

?hist

Note, all of the classic plot properties such as titles, axis labels, and axis limits can be set.

GRAPHPAD PRISM

Showing you how to do all of this in GraphPad Prism is beyond the scope of this tutorial. However, if you open this Prism FILE then you will see how easy it is.

Assignment Question

4. Using a data set of your choice generate a histogram where you overlay three data sets. To make your life easier... make sure they do not overlap too much!

OPTIONAL: ggplot: Bar Graphs

In this section, we are going to learn to plot a bar graph using a package called ggplot2. This package is very popular for R users and that is because it allows you to customize anything on your graph. Start off by installing the package ggplot2 as you have been shown in a past section.

Now, ggplot requires a long format of data rather than a wide format. A wide format is where columns are different conditions and rows are each participant. A long format will instead compile it so that the first column names the conditions, and the second column names the score. This means that if you have 20 people and 3 conditions, the first 20 rows will be the scores for condition 1, the second 20 rows will be condition 2 and so forth. This seems simple right now, but when you have multiple predictors, things begin to get complicated. Let’s say you have two time points, and three conditions per time point. That means that for each participant you need Time1*Condition1, Time2*Condition1, Time1*Condition2, Time2*Condition2, Time1*Condition3, and Time2*Condition3. Luckily, you do not have to arrange your data in this way. Instead, we will use a package called reshape2 which will compile your wide format data into long format data. Install the reshape2 package now.

Unlike past sections, I will not here walk you through each part of the code. The reason for this is that this code is much more elaborate than what you’ve used before. Instead, I have decided to give you working code which has extensive commenting. I suggest you read the comments thoroughly, but also play around with it. The best way to learn what every line of code does is by playing with it. See what happens if you delete a line, or if you change a value. If you don’t do these things, you will not truly understand what everything does.

HERE is the data you will need for this tutorial.

HERE is the code you will need for this tutorial.

Now, load the script into R and the tutorial will continue there.

Now, ggplot requires a long format of data rather than a wide format. A wide format is where columns are different conditions and rows are each participant. A long format will instead compile it so that the first column names the conditions, and the second column names the score. This means that if you have 20 people and 3 conditions, the first 20 rows will be the scores for condition 1, the second 20 rows will be condition 2 and so forth. This seems simple right now, but when you have multiple predictors, things begin to get complicated. Let’s say you have two time points, and three conditions per time point. That means that for each participant you need Time1*Condition1, Time2*Condition1, Time1*Condition2, Time2*Condition2, Time1*Condition3, and Time2*Condition3. Luckily, you do not have to arrange your data in this way. Instead, we will use a package called reshape2 which will compile your wide format data into long format data. Install the reshape2 package now.

Unlike past sections, I will not here walk you through each part of the code. The reason for this is that this code is much more elaborate than what you’ve used before. Instead, I have decided to give you working code which has extensive commenting. I suggest you read the comments thoroughly, but also play around with it. The best way to learn what every line of code does is by playing with it. See what happens if you delete a line, or if you change a value. If you don’t do these things, you will not truly understand what everything does.

HERE is the data you will need for this tutorial.

HERE is the code you will need for this tutorial.

Now, load the script into R and the tutorial will continue there.

OPTIONAL: ggplot: Grouped Bar Graphs

This tutorial requires the ggplot2 and the reshape2 packages. Importantly, the last tutorial, ‘Bar Plot with GGPlot’ walks you through what wide and long format data is, so make sure that you have read this information to better understand what we are doing here.

Grouped bar plots are a way to visualize factorial data. What we are going to do in this tutorial is present data with 2 factors. One factor is feedback with two levels (win, loss) and the other factor is frequency with four levels (Delta, Theta, Alpha, Beta). What we are going to do is display the win and loss feedback next to each other for all levels of frequency. This way we can more easily compare this data.

Similar to the last tutorial, I will not here walk you through each part of the code. The reason for this is that this code is much more elaborate than what you’ve used before. Instead, I have decided to give you working code which has extensive commenting. I suggest you read the comments thoroughly, but also play around with it. The best way to learn what every line of code does is by playing with it. See what happens if you delete a line, or if you change a value. If you don’t do these things, you will not truly understand what everything does.

HERE is the data you will need for this tutorial.

HERE is the code you will need for this tutorial.

Now, load the script into R and the tutorial will continue there.

Grouped bar plots are a way to visualize factorial data. What we are going to do in this tutorial is present data with 2 factors. One factor is feedback with two levels (win, loss) and the other factor is frequency with four levels (Delta, Theta, Alpha, Beta). What we are going to do is display the win and loss feedback next to each other for all levels of frequency. This way we can more easily compare this data.

Similar to the last tutorial, I will not here walk you through each part of the code. The reason for this is that this code is much more elaborate than what you’ve used before. Instead, I have decided to give you working code which has extensive commenting. I suggest you read the comments thoroughly, but also play around with it. The best way to learn what every line of code does is by playing with it. See what happens if you delete a line, or if you change a value. If you don’t do these things, you will not truly understand what everything does.

HERE is the data you will need for this tutorial.

HERE is the code you will need for this tutorial.

Now, load the script into R and the tutorial will continue there.

OPTIONAL: ggplot: Line Graphs

This tutorial requires the ggplot2 and the reshape2 packages. Importantly, a past tutorial, ‘Bar Plot with GGPlot’ walks you through what wide and long format data is, so make sure that you have read this information to better understand what we are doing here.

Line plots are appealing ways to present continuous data. This can include one or more levels (lines). Here, we will be presenting two lines of data. This tutorial will show you how to make line plots, however, it is formatted for ERP data. Within the script, there are comments that point out where some of the code may be removed if you are not presenting ERP data.

Similar to the last tutorial, I will not here walk you through each part of the code. The reason for this is that this code is much more elaborate than what you’ve used before. Instead, I have decided to give you working code which has extensive commenting. I suggest you read the comments thoroughly, but also play around with it. The best way to learn what every line of code does is by playing with it. See what happens if you delete a line, or if you change a value. If you don’t do these things, you will not truly understand what everything does.

HERE is the data you will need for this tutorial.

HERE is the code you will need for this tutorial.

Now, load the script into R and the tutorial will continue there.

Line plots are appealing ways to present continuous data. This can include one or more levels (lines). Here, we will be presenting two lines of data. This tutorial will show you how to make line plots, however, it is formatted for ERP data. Within the script, there are comments that point out where some of the code may be removed if you are not presenting ERP data.

Similar to the last tutorial, I will not here walk you through each part of the code. The reason for this is that this code is much more elaborate than what you’ve used before. Instead, I have decided to give you working code which has extensive commenting. I suggest you read the comments thoroughly, but also play around with it. The best way to learn what every line of code does is by playing with it. See what happens if you delete a line, or if you change a value. If you don’t do these things, you will not truly understand what everything does.

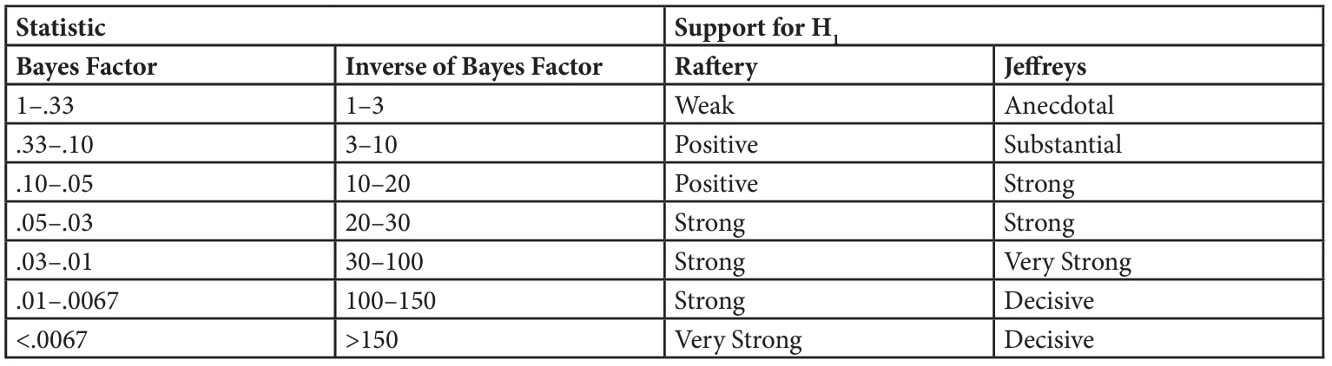

HERE is the data you will need for this tutorial.